Busting FPGA Blockchain Myths Part 3: Importing Ethash Code

Importing Ethash code

Earlier in this article we built and benchmarked the Ethash algorithm, but we didn’t actually take a close look at the code. Should you take the time to inspect the benchmark code you would find that the following line calls the Ethash function:

ethash_full(&hash, full_mem, ¶ms, previous_hash, nonce);

This, in-turn, calls upon the Ethash library and falls upon the following function in libethash/internal.c:

static void ethash_hash(

ethash_return_value *ret,

node const *full_nodes,

ethash_cache const *cache,

ethash_params const *params,

const uint8_t header_hash[32],

const uint64_t nonce) {

...

This function is effectively a C representation of the PoW algorithm given in the Ethereum yellow paper. Programmers will take lite in the fact that the C code is significantly easier to follow than the paper. Additionally, there’s some architecture-specific code in the function that can be ignored for the sake of this exercise as well as some code that assumes the presence of cached data. Removing all such code simplified the function to a purely raw representation of the PoW algorithm as given below.

static void ethash_hash(

ethash_return_value *ret,

node const *full_nodes,

ethash_cache const *cache,

ethash_params const *params,

const uint8_t header_hash[32],

const uint64_t nonce) {

// pack hash and nonce together into first 40 bytes of s_mix

node s_mix[MIX_NODES + 1];

memcpy(s_mix[0].bytes, header_hash, 32);

s_mix[0].double_words[4] = nonce;

// compute sha3-512 hash and replicate across mix

SHA3_512(s_mix->bytes, s_mix->bytes, 40);

node *const mix = s_mix + 1;

for (unsigned w = 0; w != MIX_WORDS; ++w) {

mix->words[w] = s_mix[0].words[w % NODE_WORDS];

}

unsigned const

page_size = sizeof(uint32_t) * MIX_WORDS,

num_full_pages = (unsigned) (params->full_size / page_size);

for (unsigned i = 0; i != ACCESSES; ++i) {

uint32_t const index = ((s_mix->words[0] ^ i) * FNV_PRIME ^ mix->words[i % MIX_WORDS]) % num_full_pages;

for (unsigned n = 0; n != MIX_NODES; ++n) {

const node *dag_node = &full_nodes[MIX_NODES * index + n];

for (unsigned w = 0; w != NODE_WORDS; ++w) {

mix[n].words[w] = fnv_hash(mix[n].words[w], dag_node->words[w]);

}

}

}

// compress mix

for (unsigned w = 0; w != MIX_WORDS; w += 4) {

uint32_t reduction = mix->words[w + 0];

reduction = reduction * FNV_PRIME ^ mix->words[w + 1];

reduction = reduction * FNV_PRIME ^ mix->words[w + 2];

reduction = reduction * FNV_PRIME ^ mix->words[w + 3];

mix->words[w / 4] = reduction;

}

memcpy(ret->mix_hash, mix->bytes, 32);

// final Keccak hash

SHA3_256(ret->result, s_mix->bytes, 64 + 32); // Keccak-256(s + compressed_mix)

}

Now a naïve approach would simply copy this code and use it to replace the kernel code in our krnl_vadd.cl file. Although this is the right idea, a flood of compilation errors would quickly reveal some flaws to this including:

- The code makes extensive use of macros and helper functions that are defined in separate libethash files.

- Some standard C coding practices aren’t necessarily acceptable to OpenCL (e.g. memcpy).

- The arguments used by this function don’t match the arguments from the ‘vadd’ example and in the case of OpenCL argument, passing requires some special handling (i.e. you can’t just redefine the function prototype).

But overall, the work required is not that bad:

- Add all dependent macros and helper functions to the kernel *.cl file.

- Replace all instances of memcpy as well as any datatypes not natively supported by OpenCL

- Update the host *.cpp code to accommodate appropriate arguments

Consolidating Code Into Single Kernel File

The PoW function makes extensive use of SHA-3 (Secure Hash Algorithm 3). This is a standard function specified by NIST (National Institute of Standards and Technology) and the actual algorithm originates from a hashing function known as Keccak. Inside libethash the particular implementation of Keccak used by the PoW is supplied in the sha3.c/h files.

In order for our Ethash function to run as an individual kernel we’ll need to pull in all this supporting SHA-3 code. There are also several macro definitions spread across the header files ethash.h, fnv.h, and internal.h. Consolidating all this code into a single file results in the following:

https://github.com/mkycrb/ethash/blob/master/c/ethash.c

We can now copy this code into the exiting krnl_vadd.cl file, while maintaining the OpenCL kernel attribute. The initial import will look like this:

https://github.com/mkycrb/ethash/blob/fc1419c1ed0cebc0868c6b80ff046a7da8bb94d3/ocl/krnl_ethash.cl

As mentioned earlier, this will definitely not compile as there are several aspects of the code that are not OpenCL compliant. So, we correct this mostly by replacing some datatypes and removing calls to standard libc functions. The arguments of the kernel are also updated to fit the OpenCL framework; that is, large data shared between the host and kernel is marked as global. The resulting changes can be seen here:

Now that the kernel is in good shape we just need to update the host code to pass in the appropriate data. This is a good time to review the arguments a little.

- ethash_return_value: The PoW actually returns two hash values so the original code used a special structure to hold these. In the OpenCL these are simply split out into two 32-byte hash values ret_mix & ret_hash.

- full_nodes: This is the core data that the PoW function works with. It is a large dataset (>1GB) of hashes that can be reproduced consistently by any mining computer. The PoW creates hashes based on pseudo-random chunks of data from this dataset. This dataset is also referred to as the DAG, after Dagger Hashimoto (a hashing algorithm that contributed to how the DAG is generated).

- ethash_params: This contained information about the size of some data, including the DAG. To simplify things for now we discard this argument and test on a fixed size DAG.

- header_hash: This is a 32-byte hash representing the previous block in a block-chain. For the sake of testing, this is just an arbitrary value since we not actually interfacing with the live blockchain.

- nonce: This is just a random integer-valued seed. When the PoW is used to mine, it iterates over changing seed values until is produces a hash that meets certain criteria. For the sake of testing, we’ll just fix this to zero.

So as can be seen from above, the only argument we have to worry about is the DAG. The rest are fairly arbitrary and easy to define in code.

Generating the DAG

In the benchmark code that was run earlier the DAG was generated in memory prior to the Ethash function being called, but you may also recall that this took over 10mins. Re-generating the DAG every time we want to test the PoW function is not very practical so we will dump the value to a file and then add code to the host program to read it in.

To save the DAG to a file a simple Python script was used. You may recall that several languages were supported for the Ethash library, including Python. So for this exercise, we simply went back and built the Python module (installing any required dependencies) and then ran the script below.

import pyethash

with open("cache","rb") as fcache:

cache = fcache.read()

# Using same block 0 from benchmark code

data_size = pyethash.get_full_size(0)

print("Generating dataset (DAG) of size %d." % data_size)

dataset = pyethash.calc_dataset_bytes(data_size, cache)

with open("dataset","w+b") as fcache:

fcache.write(dataset)

As mentioned earlier, the size of the DAG can vary. It’s size actually depends upon an Ethereum property called ‘epoch’ which increases with time. For the sake of testing we’ll use the smallest possible DAG (1GB) by selecting an epoch of zero.

Update Host Code

The primary changes needed for the host code are an update to the arguments passed to the kernel and the initialization of these arguments. A summary of these changes can be seen here:

https://github.com/mkycrb/ethash/commit/6b9be20600b51e3357fb7e9331831a0f474991ab

Note that some helper functions were also included (originally from Ethash library) to convert between hexadecimal strings and binary data.

It should also be noted that the value selected for the “header_hash” was not entirely arbitrary. We chose the same value used in the benchmark test so that we can compare results. This holds true for the “nonce” as well which is zero in both cases.

The code should now be ready to compile and test! The state of all the files can be viewed here:

https://github.com/mkycrb/ethash/tree/Emulation-SW-v1

Building and Testing

When a new project is created in the Vitis tool it comes with 3 build & run configurations:

- Emulation-SW: Allows you to build and run a kernel in the development environment without using or emulating an Alveo board. When running, the kernel will just execute like native code on the host.

- Emulation-HW: This will start building the kernel for the targeted Alveo board but only to the extent that is required to emulate functionality when running. This will provide performance estimates.

- System: This will do a complete build of the kernel for the targeted Alveo board and can take substantially more time than either Emulation build and a run will require an actual board.

It’s best to step through each of these in order when attempting to build/compile code. A build and/or run in an earlier stage does not guarantee success in the next stage because the requirements become more stringent and validation more extensive with each stage.

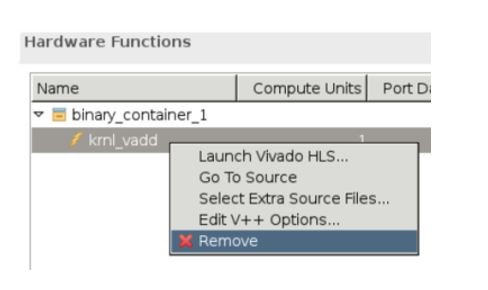

The example ‘vadd’ project was already configured to build the kernel for an Alveo board, but since we changed the name of the kernel we’ll need to update this. In the Application Project Settings window, all the Hardware Functions are listed. The ‘krnl_vadd’ can be selected and removed. For example:

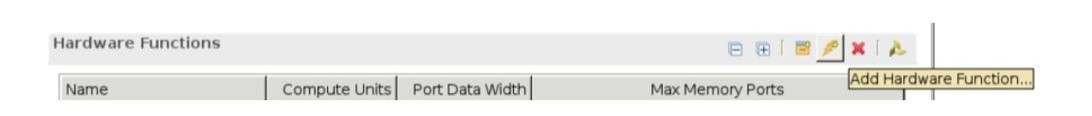

Now we add a new kernel (see below) and select our ‘krnl_ethash’ from the list that’s presented. This brings our project to a state that should be ready to build.

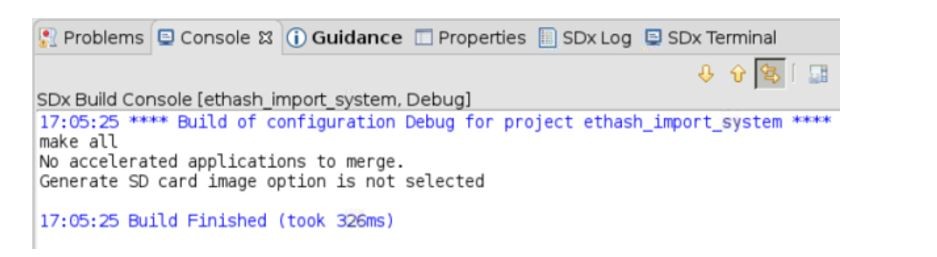

By default, new projects are configured for “Emulation-SW” so we can just go ahead and build the code (e.g. Project > Build Project). The build process is logged in the Console tab and the final output should confirm a successful build. For example:

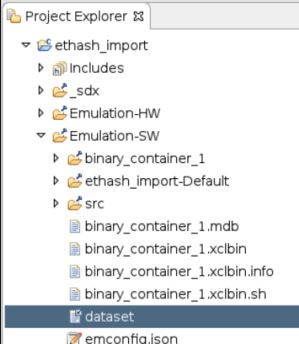

Now’s a good time to run the code and just confirm that it’s still doing what we expect after all the important changes. Note the code assumes the availability of the DAG file in the Emulation-SW folder of the project (named ‘dataset’ in the screenshot below). This is simply the file generated by the Python script covered earlier.

This is also a good time to review the two key output products of the build process. Also located in the applicable Emulation-* folder is a *.xclbin file and a *.exe file. The *.xclbin file represents the kernel. At this stage of software emulation, its contents are far from its final format, but eventually, this will be the file actually loaded into the Alveo board. This file will customize the board to run the applicable kernel in hardware. The *.exe file represents the host code that will eventually run on the system hosting the Alveo board. At a minimum, it will take the *.xclbin file as an argument since it will be responsible for loading the kernel.

Running the code (e.g. Run > Run) should print some output on the Console window. This includes confirmation that the *.xclbin was loaded, confirmation that the DAG was loaded, and then finally the results of running Ethash as shown below.

Loading: '../binary_container_1.xclbin'

dag loaded

mix: a3676e668d4a4a9de2d4688dfd9cc84c65464990fc58f2885e1b8f8bfd028b5a

hsh: a7ea1de3a8007134900cd2c86f7e55af68a1d3e4537438a0a966b6cbafa23c90

The output shows both hashes returned by the PoW, but if we look at the last ‘hsh’ value and compare it with the output from running the benchmark program on a PC we see that they match. That confirms the code is still functionally correct!

Estimating Performance

Now we will move to the next stage of building/running: hardware emulation. Switching to this configuration can be done from a drop-down menu at the top-right of the Application Project Settings as shown below.

Now we simply build and run again, just as we did for software emulation. The difference, however, is that after running a hardware emulation will view some reports that estimate performance. In fact, there are several reports available, but here we will look like just two:

- Profile Summary

- Application Timeline

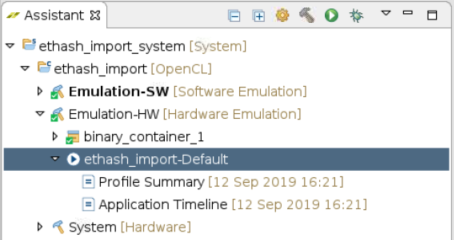

Although these reports are available within the project folders, the Assistant window is provided for easy access to all reports. An example of the two we’ll look at here is given below. Note that these two particular reports will only become available after both building and running the application.

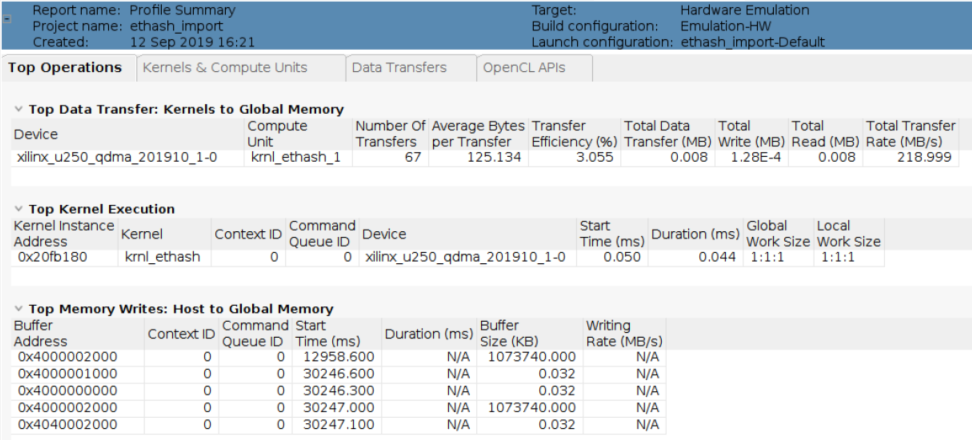

Opening the Profile Summary will provide something similar to the screenshot below.

It’s important to remember that these are just estimates since nothing was run on real hardware, but they none-the-less provide some nice sanity checks that the system is working as expected. For example, we see that the kernel incurred the highest number of data transfers (67 in this case), which makes sense because the PoW is intended to be heavily memory bound. Meaning it’s accessing memory a lot (making this a limiting factor for performance) as it grabs and processes chunks of the DAG. We can also see the large memory transfer of the DAG from host memory to (global) on-board memory at address 0x4000002000. And again, don’t be discouraged by the skewed emulation times for data transfers. The reported time of almost 13s to transfer 1GB of data is a far cry from what will actually happen on hardware.

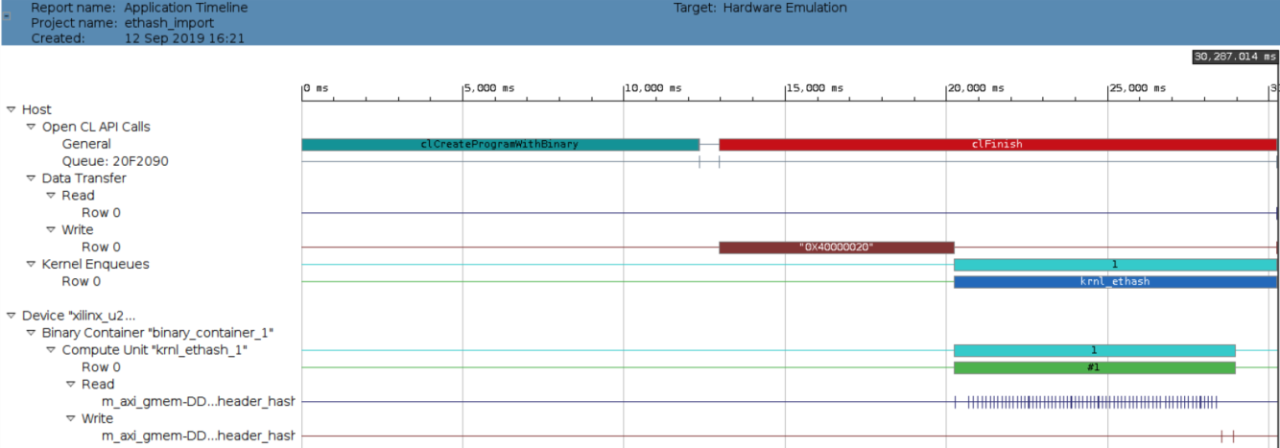

Next, we’ll look at the Application Timeline, which really helps visualize the various operations that take place to run the application. Below is an example screenshot:

Here the top-left most bar represents the loading of the kernel. Next, at around center, we see the DAG getting loaded. Then immediately following the DAG are several bars representing different aspects of the kernel executing. Near the bottom, we can also see a multitude of pulses representing dispersed memory reads as the kernel fetches data from the DAG.

This is a great exercise to confirm that the algorithm in functionally correct and the hardware will do as is expected, but it also demonstrates that running the code as-is is not an ideal solution for the sake of acceleration. For example, the report indicates a relatively long latency estimate (which tends to be ball-park accurate) for the kernel at 0.044ms and the memory utilization is very low at just 1.872% (as taken from the Data Transfers tab of the Profile Summary). This is because we took C code designed to run in a fairly serial manner on CPU clocked at a very high frequency and applied it to an FPGA, which is very good at leveraging parallelism, and ran it in a still serial manner at a much lower clock rate (300MHz).

None-the-less, we have a working kernel to use as a starting point towards additional optimizations that can yield higher levels of memory utilization and parallelism. A nice exercise for the interested reader! …or maybe a future article.

In the meantime, let's take what we have and run it on real hardware.

Read Busting FPGA Blockchain Myths Part 4: Deploying the Application

About Shaun Purvis

Shaun Purvis is a Processor Specialist Field Application Engineer (FAE) covering Eastern Canada. He works across a variety of industries, including Wired/Wireless Communications, Audio/Video Broadcast, and Industrial Vision, supporting embedded applications as well as artificial intelligence (AI) solutions. Prior to AMD Shaun worked at a consulting company as an ARM processor and AMD SoC specialist where he did a lot of globetrotting and training as the embedded industry embraced these technologies. Shaun grew up on the West Coast of BC, graduated from McGill University, and started his professional career in California. He now lives with his family in Montreal where he enjoys scaling up mountains in summer and sliding down them in winter.