Using Alveo Data Center Accelerator Cards in a KVM Environment

Overview

In a data center environment, there are multiple options for orchestrating resources. One example is using containers with Kubernetes as the orchestrator. For using Alveo in such an environment, https://developer.xilinx.com/en/articles/using-alveo-in-a-kubernetes-environment.html provides information and examples on how to deploy it.

Another mechanism is to use virtualization. A well-known technology for this is KVM (https://www.linux-kvm.org/page/Main_Page). In this article, I will show how to deploy Alveo accelerator cards in a KVM environment.

Local KVM Setup

This article is not meant to be an in-depth tutorial on how to install and configure KVM, but in this section, I will provide a short overview of how to provision your server.

As an example host environment, I have installed Ubuntu 18.04 LTS on a Dell R740 server.

I installed and configured KVM, following this guide more or less: https://fabianlee.org/2018/08/27/kvm-bare-metal-virtualization-on-ubuntu-with-kvm/

Installing KVM

First, install KVM and assorted tools:

sudo apt-get install qemu-system-x86 qemu-kvm qemu libvirt-bin virt-manager virtinst bridge-utils cpu-checker virt-viewer

Then use kvm-ok to validate that KVM was installed and that the CPU has VT-x virtualization enabled.

$ sudo kvm-ok

INFO: /dev/kvm exists

KVM acceleration can be used

If you instead get a message like the one below, enter the BIOS setup and enable VT-x.

INFO: /dev/kvm does not exist

HINT: sudo modprobe kvm_intel

INFO: Your CPU supports KVM extensions

INFO: KVM (vmx) is disabled by your BIOS

HINT: Enter your BIOS setup and enable Virtualization Technology (VT),

and then hard poweroff/poweron your system

KVM acceleration can NOT be used
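Before rebooting into the BIOS setup, it can help to confirm that the CPU itself advertises the virtualization extensions. The following is a minimal sketch that is not part of the original setup; the helper name count_virt_cpus is hypothetical. It counts the logical CPUs whose flags line in /proc/cpuinfo lists vmx (Intel VT-x) or svm (AMD-V).

```shell
# Hypothetical helper: count logical CPUs that advertise hardware
# virtualization (vmx = Intel VT-x, svm = AMD-V).
# The cpuinfo path is a parameter so the function can be tried on a saved copy.
count_virt_cpus() {
    local cpuinfo="${1:-/proc/cpuinfo}"
    # grep -c prints 0 (and returns a non-zero status) when nothing matches.
    grep '^flags' "$cpuinfo" | grep -c -w -E 'vmx|svm'
}

# Example: count_virt_cpus        # reads /proc/cpuinfo
```

A count of zero suggests the extension is not advertised at all. A non-zero count does not prove that the BIOS has enabled it, which is the part kvm-ok checks.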

Then use the virt-host-validate utility to run a full set of checks against your virtualization ability and KVM readiness.

$ sudo virt-host-validate

QEMU: Checking for hardware virtualization : PASS

QEMU: Checking if device /dev/kvm exists : PASS

QEMU: Checking if device /dev/kvm is accessible : PASS

QEMU: Checking if device /dev/vhost-net exists : PASS

QEMU: Checking if device /dev/net/tun exists : PASS

QEMU: Checking for cgroup 'memory' controller support : PASS

QEMU: Checking for cgroup 'memory' controller mount-point : PASS

QEMU: Checking for cgroup 'cpu' controller support : PASS

QEMU: Checking for cgroup 'cpu' controller mount-point : PASS

QEMU: Checking for cgroup 'cpuacct' controller support : PASS

QEMU: Checking for cgroup 'cpuacct' controller mount-point : PASS

QEMU: Checking for cgroup 'cpuset' controller support : PASS

QEMU: Checking for cgroup 'cpuset' controller mount-point : PASS

QEMU: Checking for cgroup 'devices' controller support : PASS

QEMU: Checking for cgroup 'devices' controller mount-point : PASS

QEMU: Checking for cgroup 'blkio' controller support : PASS

QEMU: Checking for cgroup 'blkio' controller mount-point : PASS

QEMU: Checking for device assignment IOMMU support : PASS

LXC: Checking for Linux >= 2.6.26 : PASS

LXC: Checking for namespace ipc : PASS

LXC: Checking for namespace mnt : PASS

LXC: Checking for namespace pid : PASS

LXC: Checking for namespace uts : PASS

LXC: Checking for namespace net : PASS

LXC: Checking for namespace user : PASS

LXC: Checking for cgroup 'memory' controller support : PASS

LXC: Checking for cgroup 'memory' controller mount-point : PASS

LXC: Checking for cgroup 'cpu' controller support : PASS

LXC: Checking for cgroup 'cpu' controller mount-point : PASS

LXC: Checking for cgroup 'cpuacct' controller support : PASS

LXC: Checking for cgroup 'cpuacct' controller mount-point : PASS

LXC: Checking for cgroup 'cpuset' controller support : PASS

LXC: Checking for cgroup 'cpuset' controller mount-point : PASS

LXC: Checking for cgroup 'devices' controller support : PASS

LXC: Checking for cgroup 'devices' controller mount-point : PASS

LXC: Checking for cgroup 'blkio' controller support : PASS

LXC: Checking for cgroup 'blkio' controller mount-point : PASS

LXC: Checking if device /sys/fs/fuse/connections exists : PASS

If you don't see PASS for each test, you should fix your setup first. On Ubuntu 18.04 LTS, IOMMU is not enabled by default, so that test would initially fail. To enable this, modify the /etc/default/grub file:

sudo vim /etc/default/grub

and add/modify the GRUB_CMDLINE_LINUX entry:

GRUB_CMDLINE_LINUX="intel_iommu=on"

Apply this configuration as follows:

sudo update-grub

And then reboot the machine.
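After the reboot, the change can be verified before re-running virt-host-validate. This is a sketch under assumptions: the helper names are hypothetical, and it only looks for the intel_iommu=on parameter used above and for the presence of IOMMU groups under /sys/kernel/iommu_groups.

```shell
# Hypothetical helpers for checking the IOMMU setup after the reboot.

# Succeeds if the kernel command line contains intel_iommu=on.
# Pass a command line as $1 to test a string instead of /proc/cmdline.
cmdline_has_intel_iommu() {
    local cmdline="${1:-$(cat /proc/cmdline)}"
    case " $cmdline " in
        *" intel_iommu=on "*) return 0 ;;
        *) return 1 ;;
    esac
}

# Prints the number of IOMMU groups; 0 usually means the IOMMU is not active.
count_iommu_groups() {
    local dir="${1:-/sys/kernel/iommu_groups}"
    [ -d "$dir" ] || { echo 0; return; }
    find "$dir" -mindepth 1 -maxdepth 1 -type d | wc -l | tr -d ' '
}

# Example:
#   cmdline_has_intel_iommu && echo "intel_iommu=on is set"
#   count_iommu_groups
```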

Add user to libvirt groups

To allow the current user to manage the guest VM without sudo, we can add ourselves to all of the libvirt groups (e.g. libvirt, libvirt-qemu) and the kvm group.

grep libvirt /etc/group | cut -d: -f1 | xargs -n1 sudo adduser "$USER"

# add user to kvm group also

sudo adduser $USER kvm

# relogin, then show group membership

exec su -l $USER

id | grep libvirt

Group membership requires a user to log back in, so if the “id” command does not show your libvirt* group membership, logout and log back in, or try “exec su -l $USER“.
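To see at a glance which groups are still missing after logging back in, a small check along these lines can be used. It is only a sketch; missing_groups is a hypothetical helper, and the group names passed to it should match the ones that exist on your system.

```shell
# Hypothetical helper: print every required group that is absent from
# a space-separated list of group names (for example the output of `id -nG`).
missing_groups() {
    local have="$1"
    shift
    local g
    for g in "$@"; do
        case " $have " in
            *" $g "*) ;;          # already a member
            *) echo "$g" ;;       # not a member yet
        esac
    done
}

# Example: missing_groups "$(id -nG)" kvm libvirt
```

No output means the current login session already has all of the listed groups.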

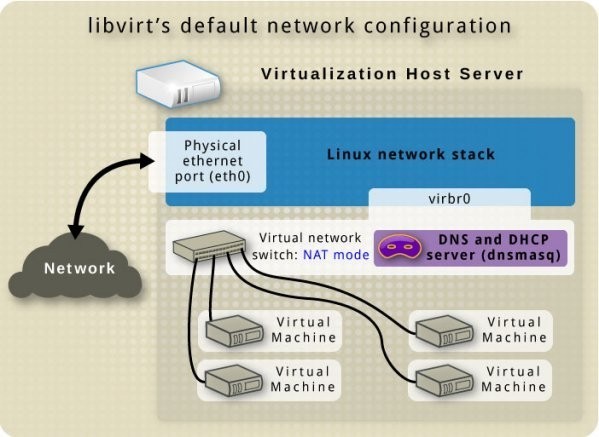

Network Configuration

By default, KVM creates a virtual switch that shows up as a host interface named “virbr0” using 192.168.122.0/24. The image below is courtesy of libvirt.org.

This interface should be visible from the Host using the “ip” command below.

~$ ip addr show virbr0

12: virbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000

link/ether 52:54:00:34:3e:8f brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

Create basic VM using virt-install

To test, you need an OS boot image. Since we are on an Ubuntu host, let’s download the ISO for the network installer of Ubuntu 18.04. This file is only 76 MB, so it is perfect for testing. When complete, you should have a local file named “~/Downloads/mini.iso”. If you store it elsewhere, adjust the --cdrom path passed to virt-install accordingly.

Note: We use the option --machine q35 when creating VMs, because the default i440fx machine type, which is a PCI machine, won't work for some more advanced Alveo use cases. The q35 machine type is a PCIe machine, which allows us to do firmware updates, support DFX platforms, etc., directly from a VM.

First, list the virtual machines that are running on our host:

# list VMs

virsh list

This should return empty as we haven't created any guest yet. Create your first guest VM with 1 vcpu/1G RAM using the default virbr0 NAT network and default pool storage.

$ virt-install --virt-type=kvm --name=ukvm1404 --ram 1024 --vcpus=1 --hvm --cdrom ~/faas/mini.iso --network network=default --disk pool=default,size=20,bus=virtio,format=qcow2 --noautoconsole --machine q35

WARNING No operating system detected, VM performance may suffer. Specify an OS with --os-variant for optimal results.

Starting install...

Allocating 'ukvm1404.qcow2' | 20 GB 00:00:00

Domain installation still in progress. You can reconnect to

the console to complete the installation process.

# open console to VM

$ virt-viewer ukvm1404

“virt-viewer” will pop up a window for the guest OS. When you click the mouse in the window and then press <ENTER>, you will see the initial Ubuntu network install screen.

If you want to delete this guest OS completely, close the GUI window opened with virt-viewer, then use the following commands:

virsh destroy ukvm1404

virsh undefine ukvm1404
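Note that virsh undefine on its own leaves the 20 GB disk image in the storage pool. The sketch below wraps both steps and also asks virsh to remove the storage through the --remove-all-storage option of virsh undefine. The function name delete_guest and the VIRSH override variable are assumptions added here to allow a dry run; they are not part of the original article.

```shell
# Command used to talk to libvirt; set VIRSH=echo for a dry run that only
# prints what would be executed.
VIRSH="${VIRSH:-virsh}"

# Hypothetical helper: stop a guest, remove its definition and its disks.
delete_guest() {
    local name="$1"
    # destroy only applies to a running guest; ignore the error if it is off.
    $VIRSH destroy "$name" 2>/dev/null || true
    # --remove-all-storage also deletes the volumes attached to the guest.
    $VIRSH undefine "$name" --remove-all-storage
}

# Example (dry run): VIRSH=echo; delete_guest ukvm1404
```

Because the disk image is deleted as well, this is only appropriate for throwaway test guests like the one created above.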

Test from GUI

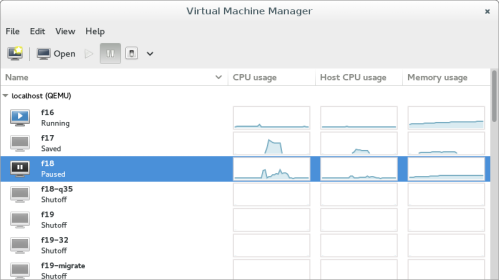

The virt-viewer utility will open a basic window to the guest OS, but notice it does not give any control besides sending keys. If you want a full GUI for managing KVM, I would suggest using “virt-manager“.

To install and start virt-manager:

sudo apt-get install qemu-system virt-manager

virt-manager

virt-manager provides a convenient interface for creating or managing a guest OS, and any guest OS you create from the CLI using virt-install will show up in this list also.

Note: When creating VMs using virt-manager, make sure to also select q35 as the machine type for full PCIe support in your guests.

Configuring an Alveo Device Passthrough

Once one or more VMs have been created, we can assign Alveo cards to them. More information on the different use models can be found at https://xilinx.github.io/XRT/master/html/security.html#deployment-models.

Install Xilinx Runtime

The first step is to install the Xilinx Runtime (XRT) on the host. The latest version for your Alveo card can be found here: https://www.xilinx.com/support/download/index.html/content/xilinx/en/downloadNav/alveo.html.

Since I don't plan on doing any deployment or development on my native OS, I don't need to install any shells there; only the XRT is needed.

The XRT for my machine can be downloaded and installed as follows:

wget -O xrt_202020.2.9.317_18.04-amd64-xrt.deb "https://www.xilinx.com/bin/public/openDownload?filename=xrt_202020.2.9.317_18.04-amd64-xrt.deb"

sudo apt install ./xrt_202020.2.9.317_18.04-amd64-xrt.deb

After the installation, a reboot might be required. We can then list the Alveo cards that are installed in the system by using the XRT tools. For example, on my system, I have 2 cards installed:

$ /opt/xilinx/xrt/bin/xbmgmt scan

*0000:af:00.0 xilinx_u280-es1_xdma_201910_1(ts=0x5d1c391d) mgmt(inst=44800)

*0000:3b:00.0 xilinx_u200_xdma_201830_2(ts=0x5d1211e8) mgmt(inst=15104)
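For scripting, the PCIe address and shell name can be pulled out of this listing. The sketch below assumes the scan output has exactly the layout shown above (an optional leading asterisk, the address, then the shell name followed by a parenthesised timestamp); other XRT versions may print a different format. The function name parse_xbmgmt_scan is hypothetical.

```shell
# Hypothetical helper: read `xbmgmt scan` output on stdin and print
# "<pcie address> <shell name>" for every card line.
parse_xbmgmt_scan() {
    awk '$1 ~ /^\*?[0-9a-f]+:[0-9a-f]+:[0-9a-f]+\.[0-7]$/ {
        bdf = $1
        sub(/^\*/, "", bdf)       # drop the leading asterisk
        name = $2
        sub(/\(.*$/, "", name)    # drop "(ts=...)"
        print bdf, name
    }'
}

# Example: /opt/xilinx/xrt/bin/xbmgmt scan | parse_xbmgmt_scan
```

The two hex digits after the first colon (af and 3b on my system) are the values used later when passing a card through to a VM.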

Using PCIe Passthrough

Note: This section describes the PCIe passthrough configuration and how to set it up manually. A later section describes how to automate this with some scripts.

Most Alveo cards expose 2 physical functions on the PCIe interface. Since in this article, we want to provide full control to the guest OS for a device, we need to pass through both functions. This can be done using the virsh command. First, we need to create 2 files, one for each of the physical functions.
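To check which cards on a host actually expose both functions, the output of lspci -d 10ee: (10ee is the Xilinx PCI vendor ID) can be grouped by bus. This is a minimal sketch: buses_with_two_functions is a hypothetical helper, and it assumes lspci prints addresses in the short bus:slot.function form without a domain prefix.

```shell
# Hypothetical helper: read `lspci -d 10ee:` output on stdin and print the
# bus number of every device that exposes both function 0 (management)
# and function 1 (user).
buses_with_two_functions() {
    awk '{
        # "3b:00.0" -> a[1] = bus, a[2] = slot, a[3] = function
        split($1, a, /[:.]/)
        if (a[3] == "0") mgmt[a[1]] = 1
        if (a[3] == "1") user[a[1]] = 1
    }
    END {
        for (b in mgmt) if (b in user) print b
    }' | sort
}

# Example: lspci -d 10ee: | buses_with_two_functions
```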

Note: The passthrough config below is only for the newer q35 machine type. The older machine type i440fx does not support all features needed for Alveo. In the scripts pointed to below, support for i440fx is still available for backwards compatibility.

As an example, these files can be used to pass through the U200 on my system, installed in PCIe slot 0x3b (bus 0x3b in the XML below):

$ cat pass-mgmt.xml

<hostdev mode='subsystem' type='pci' managed='yes'>

<source>

<address domain='0x0000' bus='0x3b' slot='0x00' function='0x0'/>

</source>

<address type='pci' domain='0x0000' bus='0x3b' slot='0x00' function='0x0' multifunction='on'/>

</hostdev>

$ cat pass-user.xml

<hostdev mode='subsystem' type='pci' managed='yes'>

<source>

<address domain='0x0000' bus='0x3b' slot='0x00' function='0x1'/>

</source>

<address type='pci' domain='0x0000' bus='0x3b' slot='0x00' function='0x1'/>

</hostdev>

With these files, virsh can now be used to pass through the U200 card to an existing VM. There are multiple ways to do this; the --config option used here writes the change to the VM's persistent configuration, so the device becomes available in the guest OS only after the VM is restarted. On my system, I have a VM named centos7.5, so let's attach the U200 to that domain:

$ virsh attach-device centos7.5 --file pass-user.xml --config

Device attached successfully

$ virsh attach-device centos7.5 --file pass-mgmt.xml --config

Device attached successfully
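To confirm what was recorded, the persistent domain definition can be inspected with virsh dumpxml --inactive. The sketch below extracts the host-side PCI addresses of all hostdev entries from that XML; list_hostdev_sources is a hypothetical helper, and it assumes the line-per-element layout that virsh dumpxml prints.

```shell
# Hypothetical helper: read domain XML on stdin and print the host PCI
# address (domain:bus:slot.function) of every <hostdev> source.
list_hostdev_sources() {
    awk '
        /<hostdev /   { in_hostdev = 1 }
        /<\/hostdev>/ { in_hostdev = 0 }
        /<source>/    { in_source = 1 }
        /<\/source>/  { in_source = 0 }
        in_hostdev && in_source && /<address / {
            dom = ""; bus = ""; slot = ""; fn = ""
            # Split on single quotes: odd fields are attribute names,
            # even fields are the quoted values.
            n = split($0, part, "\047")
            for (i = 1; i < n; i += 2) {
                val = part[i + 1]
                sub(/^0x/, "", val)
                if      (part[i] ~ /domain=$/)   dom  = val
                else if (part[i] ~ /bus=$/)      bus  = val
                else if (part[i] ~ /slot=$/)     slot = val
                else if (part[i] ~ /function=$/) fn   = val
            }
            print dom ":" bus ":" slot "." fn
        }'
}

# Example: virsh dumpxml --inactive centos7.5 | list_hostdev_sources
```

After the two attach commands above, both 0000:3b:00.0 and 0000:3b:00.1 would be expected in the list.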

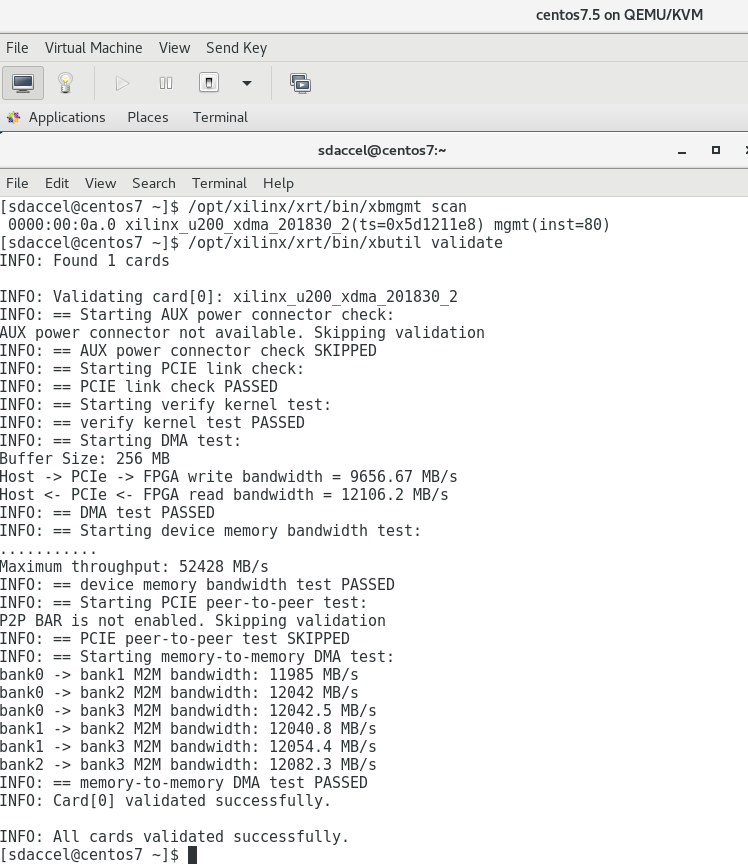

When booting the centos7.5 VM, if we have the correct XRT and Shell installed, we can now use the U200 directly:

Similarly to attaching devices, the same xml files can be used to detach devices again:

$ virsh detach-device centos7.5 --file pass-user.xml --config

Device detached successfully

$ virsh detach-device centos7.5 --file pass-mgmt.xml --config

Device detached successfully

Convenience scripts to attach/detach Alveo Cards

Note: This section explains some scripts that make PCIe passthrough easier to use. The latest version of these scripts can be found at https://github.com/Xilinx/kvm_helper_scripts

To make it more convenient to attach and detach Alveo cards to different VMs, I've created a script to automate this as much as possible. The script relies on 2 helper XML template files:

$ cat pass-user.xml_base

<hostdev mode='subsystem' type='pci' managed='yes'>

<source>

<address domain='0x0000' bus='0x$DEV' slot='0x00' function='0x1'/>

</source>

<address type='pci' domain='0x0000' bus='0x$GUESTBUS' slot='0x$SLOT' function='0x1'/>

</hostdev>

$ cat pass-mgmt.xml_base

<hostdev mode='subsystem' type='pci' managed='yes'>

<source>

<address domain='0x0000' bus='0x$DEV' slot='0x00' function='0x0'/>

</source>

<address type='pci' domain='0x0000' bus='0x$GUESTBUS' slot='0x$SLOT' function='0x0' multifunction='on'/>

</hostdev>

With those, I created a script attach.sh as follows:

#!/bin/bash

if [ $# -lt 2 ]; then

echo "Usage: $0 [-u] [-t] <OS name> <pcie slot>"

echo " -u: only map user function"

echo " -t: keep temp files"

echo "For example: $0 centos7.5 af"

echo

echo "OS list:"

virsh list --all

echo

echo "========================================================"

echo

echo "Xilinx devices:"

[ -f /opt/xilinx/xrt/bin/xbmgmt ] && /opt/xilinx/xrt/bin/xbmgmt scan || lspci -d 10ee:

echo

echo

echo "========================================================"

echo

for dev in $(virsh list --all --name); do

devices=$(virsh dumpxml $dev | grep '<hostdev' -A 5 | grep "function='0x1'" | grep -v "type" | tr -s ' ' | cut -d' ' -f4 | cut -d= -f2 | awk '{print substr($0,4,2);}')

if [[ ! -z "$devices" ]]; then

echo "Attached host devices in $dev:"

echo $devices

echo

fi

done

exit 1

fi

KEEP_TEMP=0

MAP_MGMT=1

if [ "$1" = "-u" ]; then

MAP_MGMT=0

shift

fi

if [ "$1" = "-t" ]; then

KEEP_TEMP=1

shift

fi

export OS=$1

export DEV=$2

#not sure what to pick for SLOT/BUS on guest.

#Testing shows that on q35 systems, SLOT 0x00 works, with BUS number the unique device number of the pci on the host

#On i440fx we need to use bus 0x00, and we use a unique slot which is the device number on the host

SLOT=$2

GUESTBUS="00"

if virsh dumpxml "$OS" | grep -q q35; then

SLOT="00"

GUESTBUS=$2

fi

export SLOT

export GUESTBUS

CMD=$(basename $0)

COMMAND=${CMD%.sh}

if [ $MAP_MGMT -eq 1 ]; then

envsubst < pass-mgmt.xml_base > pass-mgmt-$DEV-$OS.xml

virsh $COMMAND-device $OS --file pass-mgmt-$DEV-$OS.xml --config

fi

envsubst < pass-user.xml_base > pass-user-$DEV-$OS.xml

virsh $COMMAND-device $OS --file pass-user-$DEV-$OS.xml --config

if [ $KEEP_TEMP -eq 0 ]; then

rm -f pass-mgmt-$DEV-$OS.xml pass-user-$DEV-$OS.xml

fi

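Save this script as attach.sh and create a symbolic link named detach.sh that points to it. The script derives the virsh subcommand (attach-device or detach-device) from the name it is invoked with, so the same file handles both operations: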

$ ls -al attach.sh detach.sh

-rwxrwxr-x 1 alveo alveo 1159 Mar 20 10:02 attach.sh

lrwxrwxrwx 1 alveo alveo 9 Mar 20 12:15 detach.sh -> attach.sh
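The two decisions the script makes, which virsh subcommand to run and which guest address to assign, can be isolated as small functions to make them easier to follow. This is only an illustrative sketch of the same logic; the function names are hypothetical and do not appear in the script itself.

```shell
# Hypothetical helper: derive the virsh subcommand from the name the script
# was invoked as, e.g. ./attach.sh -> attach-device, ./detach.sh -> detach-device.
subcommand_from_name() {
    local cmd
    cmd="$(basename "$1")"
    echo "${cmd%.sh}-device"
}

# Hypothetical helper: print "<guest bus> <guest slot>" for a machine type
# string and a host bus number, following the rule used in the script:
#   q35:    guest bus = host bus number, guest slot = 00
#   i440fx: guest bus = 00,              guest slot = host bus number
guest_address() {
    local machine="$1" hostbus="$2"
    case "$machine" in
        *q35*) echo "$hostbus 00" ;;
        *)     echo "00 $hostbus" ;;
    esac
}

# Example:
#   subcommand_from_name "$0"
#   guest_address pc-q35-2.11 3b
```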

When executed without any arguments, the script will output the existing domains (VMs), the Alveo cards installed in the system, and which Alveo cards are attached to which VMs. For example:

$ ./attach.sh

Usage: ./attach.sh <OS name> <pcie slot>

For example: ./attach.sh centos7.5 af

OS list:

Id Name State

----------------------------------------------------

- centos7.5 shut off

- ubuntu18.04 shut off

========================================================

Xilinx devices:

*0000:af:00.0 xilinx_u280-es1_xdma_201910_1(ts=0x5d1c391d) mgmt(inst=44800)

*0000:3b:00.0 xilinx_u200_xdma_201830_2(ts=0x5d1211e8) mgmt(inst=15104)

========================================================

Attached host devices in centos7.5:

3b

af

As we can see here, I have two VMs on my system (centos7.5 and ubuntu18.04), but neither of them is running. There are 2 Alveo cards installed in my server (U200 in slot 0000:3b:00.0 and U280 in slot 0000:af:00.0). Both have been attached to the centos7.5 VM. To attach or detach an Alveo card, you can use 'attach.sh <OS name> <pcie slot>'. We can, for example, attach the U200 to the ubuntu18.04 machine as well:

$ ./attach.sh ubuntu18.04 3b

Device attached successfully

Device attached successfully

$ ./attach.sh

Usage: ./attach.sh <OS name> <pcie slot>

For example: ./attach.sh centos7.5 af

OS list:

Id Name State

----------------------------------------------------

- centos7.5 shut off

- ubuntu18.04 shut off

========================================================

Xilinx devices:

*0000:af:00.0 xilinx_u280-es1_xdma_201910_1(ts=0x5d1c391d) mgmt(inst=44800)

*0000:3b:00.0 xilinx_u200_xdma_201830_2(ts=0x5d1211e8) mgmt(inst=15104)

========================================================

Attached host devices in centos7.5:

3b

af

Attached host devices in ubuntu18.04:

3b

Note: Devices can be attached to multiple VMs, but these VMs cannot run simultaneously

Likewise, to detach a device, the syntax is 'detach.sh <OS name> <pcie slot>'. So to detach the U200 from the centos7.5 VM, this can be used:

$ ./detach.sh centos7.5 3b

Device detached successfully

Device detached successfully

$ ./attach.sh

Usage: ./attach.sh <OS name> <pcie slot>

For example: ./attach.sh centos7.5 af

OS list:

Id Name State

----------------------------------------------------

- centos7.5 shut off

- ubuntu18.04 shut off

========================================================

Xilinx devices:

*0000:af:00.0 xilinx_u280-es1_xdma_201910_1(ts=0x5d1c391d) mgmt(inst=44800)

*0000:3b:00.0 xilinx_u200_xdma_201830_2(ts=0x5d1211e8) mgmt(inst=15104)

========================================================

Attached host devices in centos7.5:

af

Attached host devices in ubuntu18.04:

3b

I also created auto-completion support for this script. Create a file autocomplete_faas.sh with this content:

_faas()

{

local cur prev hosts devices suggestions device_suggestions

COMPREPLY=()

cur="${COMP_WORDS[COMP_CWORD]}"

prev="${COMP_WORDS[COMP_CWORD-1]}"

hosts="$(virsh list --all --name)"

devices="$(lspci -d 10ee: | grep \\.0 | awk '{print substr($0,1,2);}' | tr '\n' ' ' | head -c -1)"

case $COMP_CWORD in

1)

COMPREPLY=( $(compgen -W "${hosts}" -- ${cur}) )

return 0

;;

2)

if [ "${COMP_WORDS[0]}" == "./detach.sh" ]; then

# only return attached devices

devices=$(virsh dumpxml $prev | grep '<hostdev' -A 5 | grep "function='0x1'" | grep -v "type" | tr -s ' ' | cut -d' ' -f4 | cut -d= -f2 | awk '{print substr($0,4,2);}')

fi

suggestions=( $(compgen -W "${devices}" -- ${cur}) )

;;

esac

if [ "${#suggestions[@]}" == "1" ] || [ ! -f /opt/xilinx/xrt/bin/xbutil ] ; then

COMPREPLY=("${suggestions[@]}")

else

# more than one suggestions resolved,

# respond with the full device suggestions

declare -a device_suggestions

for ((dev=0;dev<${#suggestions[@]};dev++)); do

#device_suggestions="$device_suggestions\n$dev $(/opt/xilinx/xrt/bin/xbutil scan | grep ":$dev:")"

device_suggestions+=("${suggestions[$dev]}-->$(/opt/xilinx/xrt/bin/xbutil scan | grep ":${suggestions[$dev]}:" | xargs echo -n)")

done

COMPREPLY=("${device_suggestions[@]}")

fi

}

complete -F _faas ./attach.sh

complete -F _faas ./detach.sh

Then source this file:

source ./autocomplete_faas.sh

You can then use auto-completion with TAB for both the VM name and the device.

Conclusion

This article shows how to use Alveo data center accelerator cards in a KVM environment. Individual Alveo cards can be attached to and detached from VMs dynamically, and can thus be used in these different VMs as if they were running natively on the server.

About Kester Aernoudt

Kester Aernoudt received his master's degree in Computer Science at the University of Ghent in 2002. In 2002 he started as a Research Engineer in the Technology Center of Barco, where he worked on a wide range of processing platforms such as microcontrollers, DSPs, embedded processors, FPGAs, multi-core CPUs, and GPUs. He joined AMD in 2011 and now works as a Processor Specialist covering Europe and Israel, supporting customers and colleagues on embedded processors and x86 acceleration, specifically targeting our Zynq devices and Alveo.