Vitis AI

Optimal Artificial Intelligence Inference from Edge to Cloud

The Vitis™ AI software is a comprehensive AI inference development solution for AMD devices, boards, Alveo™ data center acceleration cards, select PCs, laptops and workstations. It consists of a rich set of AI models, optimized deep learning processor unit (DPU) cores, tools, libraries, and example designs for AI at the edge, endpoints, and in the data center. It is designed with high efficiency and ease of use in mind, unleashing the full potential of AI acceleration on AMD Adaptive SoCs and Ryzen™ AI powered PCs.

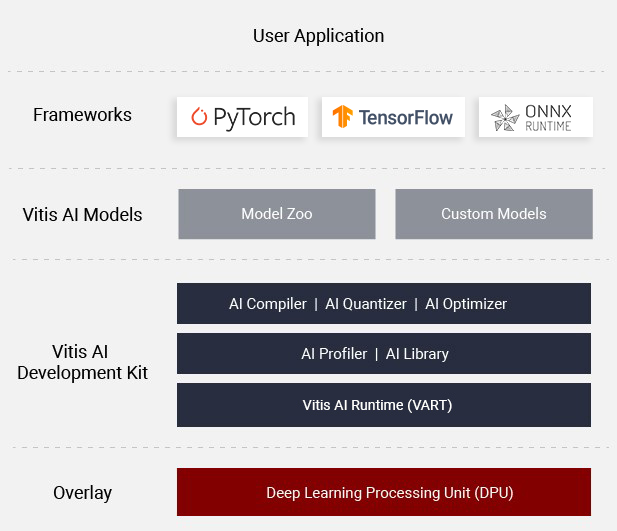

Figure 1 - Vitis AI Structure

How your development works with the Vitis AI Software:

- Support for mainstream frameworks and the latest models for diverse deep learning inference tasks, including CNNs and generative large language models

- Powerful quantizer and optimizer tools for optimal model accuracy and processing efficiency

- Easy compilation flow and high-level APIs to achieve the fastest deployment of custom models

- Highly efficient and configurable DPU cores to meet different needs for throughput, latency, and power at the edge, endpoints, and in the cloud

Explore All the Possibilities with Vitis AI

Figure 2 - Model Zoo

AI Model Zoo

The AI Model Zoo is open to all users and offers a rich set of off-the-shelf deep learning models in PyTorch, TensorFlow, and ONNX. It provides optimized and retrainable AI models that enable faster execution, performance acceleration, and production deployment on AMD platforms.

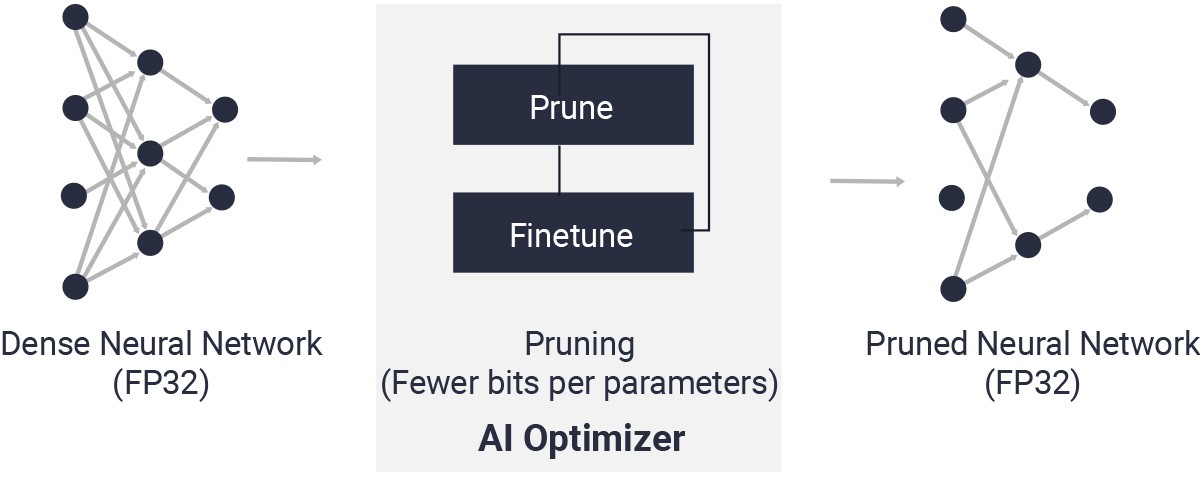

AI Optimizer

With exceptional model compression technology, the AI optimizer reduces model complexity by 5X to 50X with minimal accuracy impact. Deep compression takes the performance of your AI inference to the next level.
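As a rough illustration of how model compression can shrink a layer, the sketch below prunes whole output channels by their L1 norm. It is a generic, simplified example of channel pruning and not the AI optimizer's actual algorithm; the function name `prune_channels`, the `keep_ratio` parameter, and the sample weights are all hypothetical.

```python
def prune_channels(weights, keep_ratio):
    """Keep the output channels with the largest L1 norm.

    weights: list of output channels, each a list of floats.
    keep_ratio: fraction of channels to keep (0 < keep_ratio <= 1).
    Returns (kept_indices, pruned_weights).
    """
    if not 0 < keep_ratio <= 1:
        raise ValueError("keep_ratio must be in (0, 1]")
    n_keep = max(1, round(len(weights) * keep_ratio))
    norms = [sum(abs(w) for w in channel) for channel in weights]
    # Rank channels by L1 norm (largest first), then restore original order.
    ranked = sorted(range(len(weights)), key=lambda i: norms[i], reverse=True)
    kept = sorted(ranked[:n_keep])
    return kept, [weights[i] for i in kept]


# Hypothetical layer with four output channels of three weights each.
layer = [
    [0.9, -1.2, 0.4],
    [0.01, 0.02, -0.01],
    [-0.7, 0.8, 0.6],
    [0.03, -0.02, 0.0],
]
kept, pruned = prune_channels(layer, keep_ratio=0.5)
print(kept)  # indices of the channels that survive pruning
```

In practice a pruned model is usually fine-tuned afterwards to recover accuracy, which this sketch leaves out.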

Figure 3 - Vitis AI Optimizer

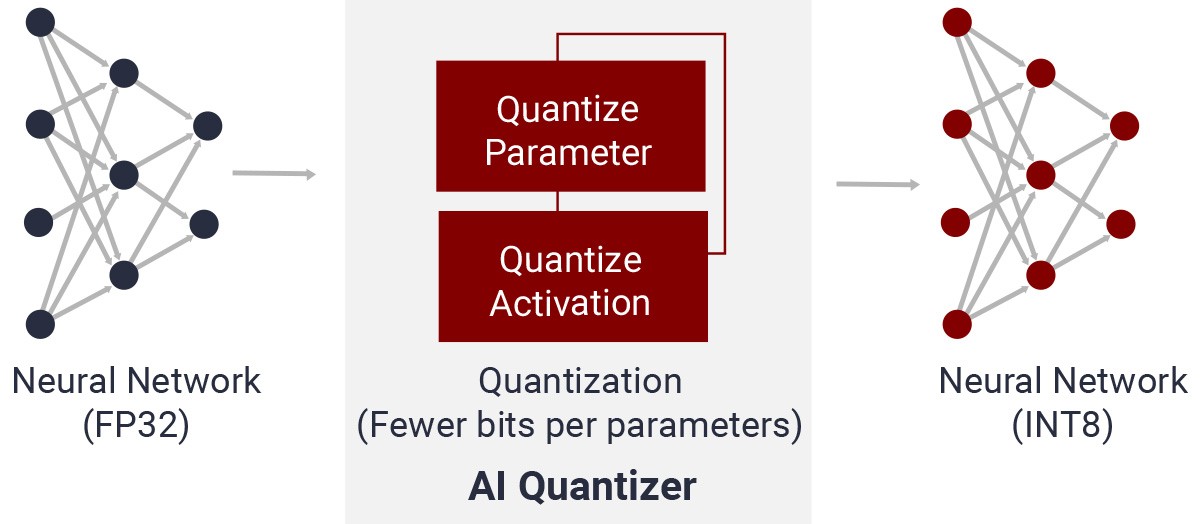

Figure 4 - Vitis AI Quantizer

AI Quantizer

The AI quantizer provides a complete process of custom operator inspection, quantization, calibration, and fine-tuning. It converts floating-point models into fixed-point models that require less memory bandwidth, providing faster speed and higher computing efficiency.
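To make the floating-point to fixed-point conversion concrete, here is a minimal sketch of symmetric 8-bit quantization with a power-of-two scale. The power-of-two scale and the max-absolute-value calibration are assumptions chosen for simplicity, and the helper names (`choose_fraction_bits`, `quantize`, `dequantize`) are hypothetical rather than part of the Vitis AI quantizer API.

```python
import math


def choose_fraction_bits(values, bit_width=8):
    """Calibrate: pick the number of fractional bits so the largest magnitude fits."""
    max_abs = max(abs(v) for v in values)
    if max_abs == 0:
        return bit_width - 1
    qmax = 2 ** (bit_width - 1) - 1
    # Largest power-of-two scale that keeps max_abs * scale within qmax.
    return math.floor(math.log2(qmax / max_abs))


def quantize(values, frac_bits, bit_width=8):
    """Map floats to signed fixed-point integers, clamping to the valid range."""
    qmin, qmax = -(2 ** (bit_width - 1)), 2 ** (bit_width - 1) - 1
    scale = 2.0 ** frac_bits
    return [min(qmax, max(qmin, round(v * scale))) for v in values]


def dequantize(q_values, frac_bits):
    """Map fixed-point integers back to approximate float values."""
    scale = 2.0 ** frac_bits
    return [q / scale for q in q_values]


weights = [0.5, -1.25, 0.031, 1.9]  # hypothetical float weights
frac_bits = choose_fraction_bits(weights)
q = quantize(weights, frac_bits)
restored = dequantize(q, frac_bits)
print(frac_bits, q, restored)
```

Each value is stored in one byte instead of four, which is where the reduced memory bandwidth comes from; the rounding error per weight is bounded by half of one quantization step.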

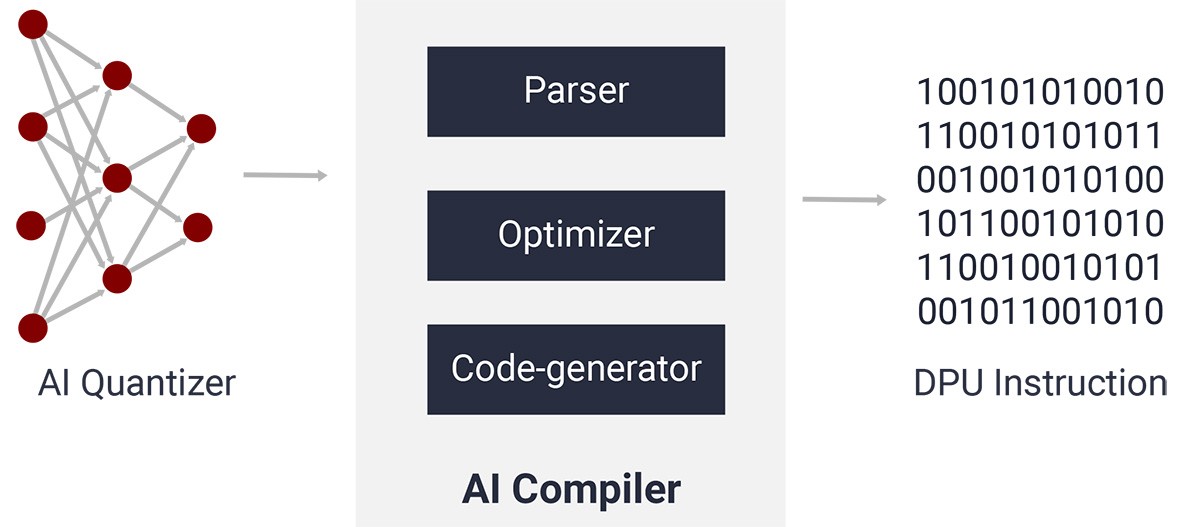

AI Compiler

The AI compiler maps the AI model to a highly efficient instruction set and data flow. It also performs sophisticated optimizations, such as layer fusion and instruction scheduling, and reuses on-chip memory as much as possible.
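Layer fusion can be illustrated with a common textbook case: folding an inference-time batch normalization into the preceding linear layer so that one layer does the work of two. The sketch below is a generic example under that assumption and does not describe the AI compiler's internal passes; all function names and values are hypothetical.

```python
import math


def linear(x, weight, bias):
    """Fully connected layer: one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weight, bias)]


def batchnorm(y, gamma, beta, mean, var, eps=1e-5):
    """Inference-time batch normalization, applied per output channel."""
    return [g * (yi - m) / math.sqrt(v + eps) + bt
            for yi, g, bt, m, v in zip(y, gamma, beta, mean, var)]


def fold_batchnorm(weight, bias, gamma, beta, mean, var, eps=1e-5):
    """Return (weight', bias') so that linear(x, weight', bias') equals
    batchnorm(linear(x, weight, bias), gamma, beta, mean, var)."""
    fused_w, fused_b = [], []
    for row, b, g, bt, m, v in zip(weight, bias, gamma, beta, mean, var):
        s = g / math.sqrt(v + eps)  # per-channel scale from the batch norm
        fused_w.append([w * s for w in row])
        fused_b.append((b - m) * s + bt)
    return fused_w, fused_b


# Hypothetical two-input, two-output layer followed by batch norm.
weight = [[0.2, -0.5], [1.0, 0.3]]
bias = [0.1, -0.2]
gamma, beta = [1.5, 0.8], [0.0, 0.25]
mean, var = [0.05, -0.1], [0.9, 1.2]
x = [2.0, -1.0]

reference_out = batchnorm(linear(x, weight, bias), gamma, beta, mean, var)
fused_w, fused_b = fold_batchnorm(weight, bias, gamma, beta, mean, var)
fused_out = linear(x, fused_w, fused_b)
print(reference_out, fused_out)
```

The fused layer produces the same outputs up to floating-point rounding while removing one pass over the intermediate data, which is the kind of saving layer fusion aims for.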

Figure 5 - Vitis AI Compiler

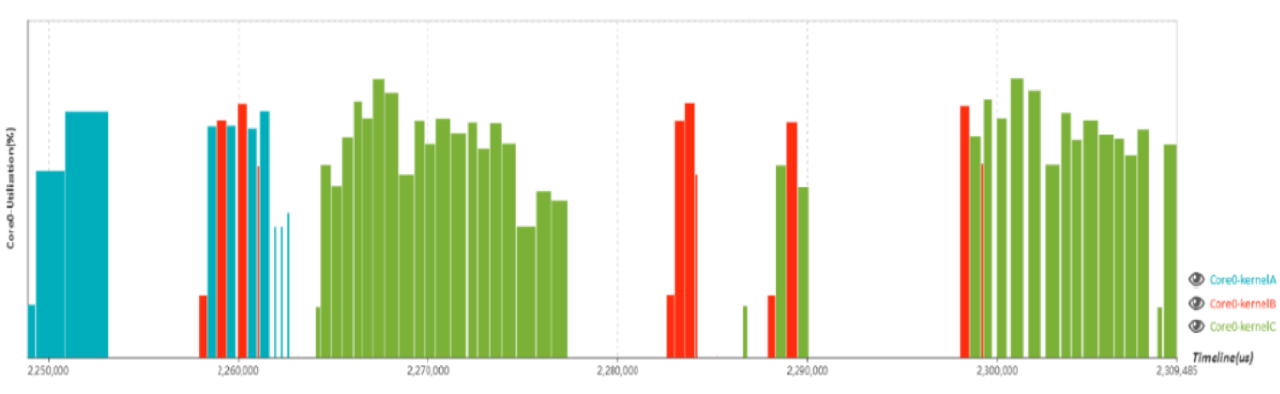

AI Profiler

The performance profiler allows programmers to perform in-depth analysis of the efficiency and utilization of the AI inference implementation.

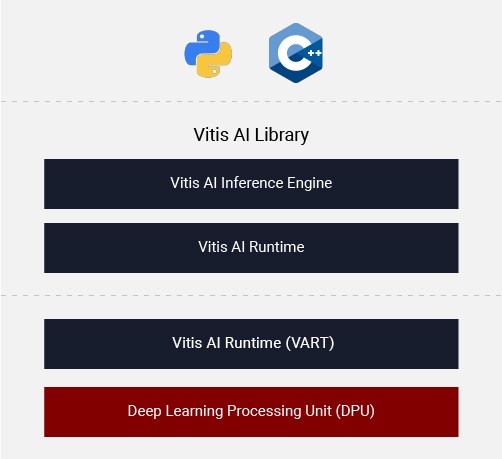

AI Library

The Vitis AI Library is a set of high-level libraries and APIs built for efficient AI inference with DPU cores. It is built on the Vitis AI Runtime (VART) with unified APIs and provides easy-to-use interfaces for AI model deployment on AMD platforms.

Figure 7 - Vitis AI Library

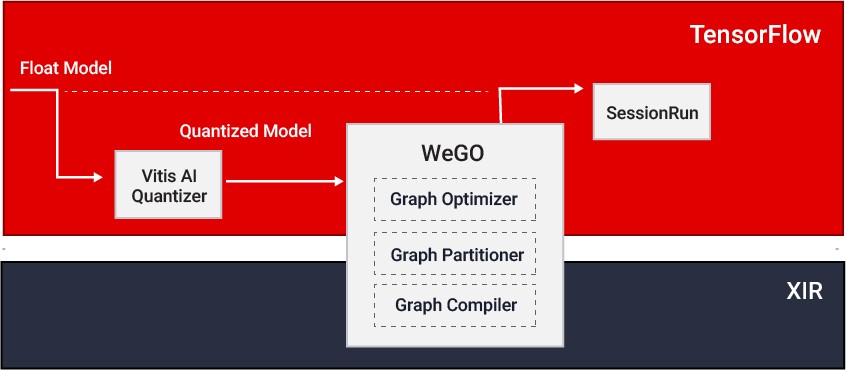

Figure 8 - Vitis AI Compiler

Whole Graph Optimizer (WeGO)

The WeGO framework inference flow offers a straightforward path from training to inference by leveraging the native TensorFlow or PyTorch framework to run operators that the DPU does not support on the CPU, greatly speeding up model deployment and evaluation on cloud DPUs.
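The idea of splitting a model between the DPU and the CPU can be sketched as grouping consecutive operators by the device that can run them. This is a simplified illustration over a linear operator sequence, not the WeGO implementation; the operator names, the `DPU_SUPPORTED` set, and the `partition` function are hypothetical.

```python
from itertools import groupby

# Hypothetical set of operators the accelerator can run.
DPU_SUPPORTED = {"conv2d", "relu", "maxpool", "add"}


def partition(ops, supported=DPU_SUPPORTED):
    """Group consecutive ops by target device, preserving execution order."""
    return [
        (device, list(group))
        for device, group in groupby(
            ops, key=lambda op: "DPU" if op in supported else "CPU"
        )
    ]


# Hypothetical model expressed as an ordered list of operator names.
model_ops = ["conv2d", "relu", "custom_nms", "conv2d", "add", "softmax_topk"]
for device, segment in partition(model_ops):
    print(device, segment)
```

Real models are graphs rather than flat lists, so an actual partitioner also has to track data dependencies between segments.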

Deep-Learning Processor Unit (DPU)

The DPU is an adaptable domain-specific architecture (DSA) that matches the fast-evolving AI algorithms of CNN and Transformer-based models with the industry-leading performance found on AMD Adaptive SoCs, Alveo data center accelerator cards and select Ryzen AI powered PCs.

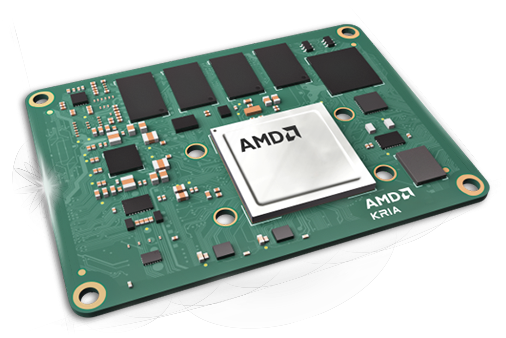

Edge Deployment

The Vitis™ AI software delivers powerful computing performance with optimized algorithms for edge devices while allowing for flexibility in deployment with optimal power consumption. It brings higher computing performance to popular edge applications in automotive, industrial, medical, video analysis, and more.

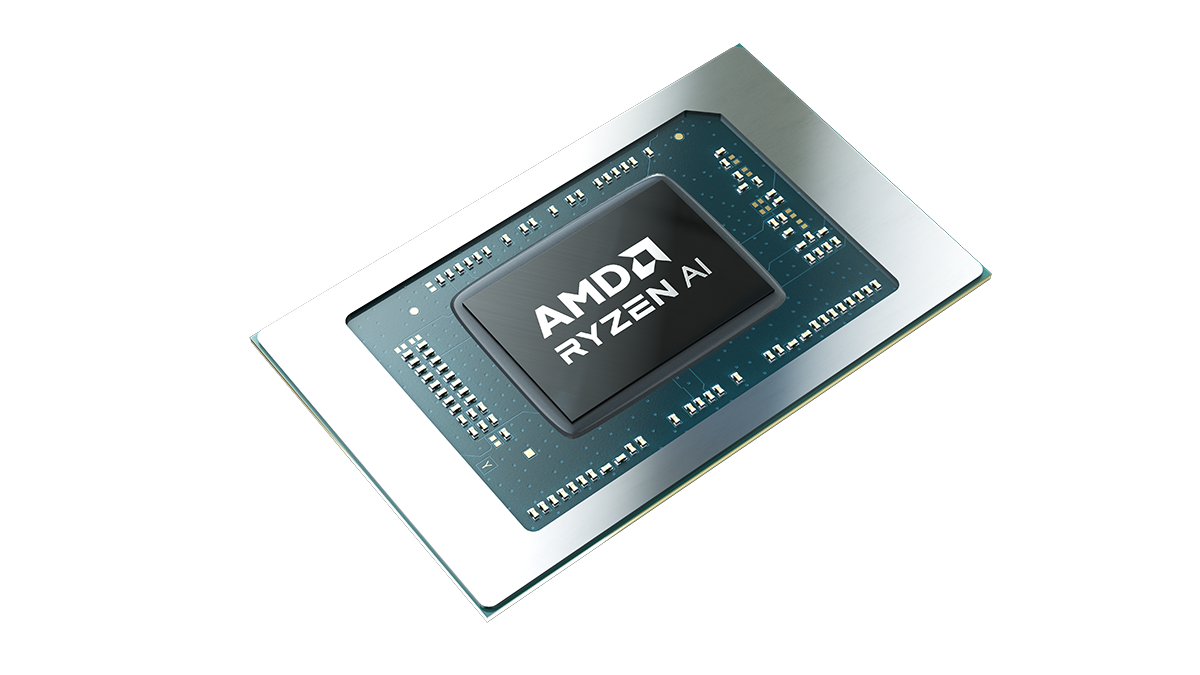

Client Deployment

AMD Ryzen™ 7040 Series mobile processors are built with the AMD XDNA™ architecture, helping enable accelerated multitasking, increased productivity, efficiency, and advanced collaboration.

On-Premise Deployment

Empowered by the Vitis AI solution, Alveo™ data center accelerator cards offer competitive AI inference performance for different workloads, including CNNs, RNNs, and NLP models. The out-of-the-box, on-premise AI solutions are designed to meet the ultra-low latency, high throughput, and high flexibility requirements of modern data centers, providing higher computing capabilities than CPUs and GPUs at a lower TCO.

Cloud Deployment

Working with public cloud service providers such as AWS and VMAccel, AMD now offers remote access to FPGA and Versal™ adaptive SoC cloud instances for quickly getting started on model deployments—even without local hardware or software.

Vitis AI Platform Documentation

Extensive documentation support is available for developing with the Vitis™ AI platform on models, tools, deep learning processor units, etc.

Link to specific documents below, or visit the Documentation Portal to see all the Vitis AI Platform documents.

Empowering Autonomous Driving and ADAS Technologies

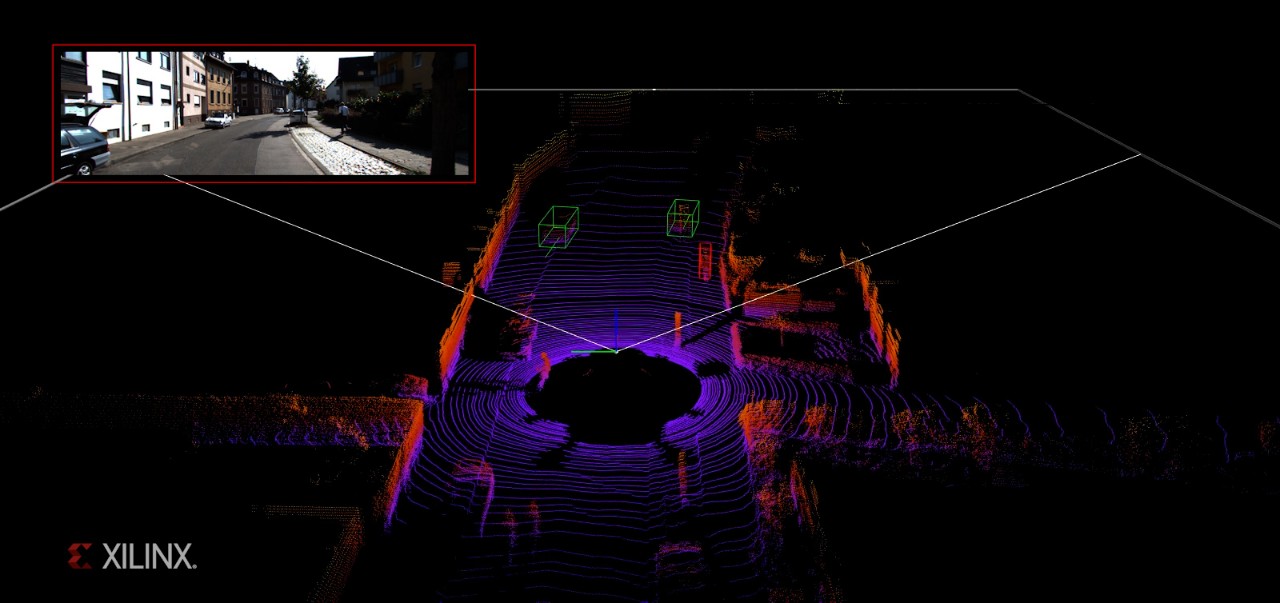

Real-Time Multi-Class 3D Object Detection

With Vitis™ AI, it is now possible to achieve real-time processing with 3D perception AI algorithms on embedded platforms. The co-optimization of hardware and software delivers leading performance for the state-of-the-art PointPillars model on Zynq™ UltraScale+™ MPSoC.

Ultra-Low Latency Application for Autonomous Driving

Latency is critical to decision-making for autonomous vehicles traveling at high speed and encountering obstacles. With an innovative domain-specific accelerator and software optimization, Vitis AI empowers autonomous driving vehicles to process deep learning algorithms with ultra-low latency and higher performance.

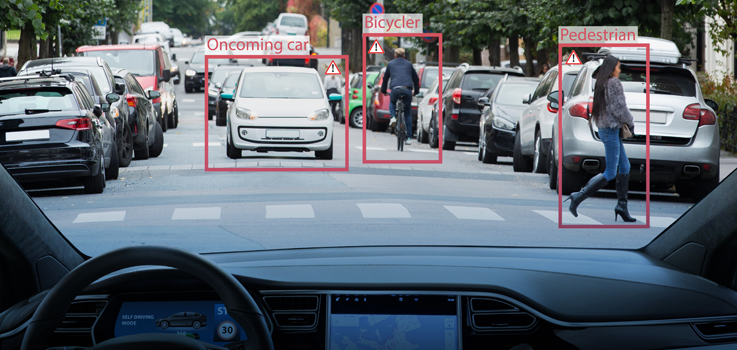

Object Detection & Segmentation

With strong scalability and adaptability to fit many low-end to high-end ADAS products, Vitis AI delivers industry-leading performance supporting popular AI algorithms for object detection, lane detection, and segmentation in front ADAS, in-cabin, and surround-view systems.

Making Cities Smarter and Safer

Video Analytics

Cities are increasingly employing intelligence-based systems at the edge and in the cloud. The massive amount of data generated every day requires a powerful end-to-end AI analytics system to quickly detect and process objects, traffic, and facial behavior. This adds valuable insight to each frame from edge to cloud.

Learn more about AMD in Machine & Computer Vision >

Transforming the Power of AI to Improve Health

AI in Imaging, Diagnostics and Clinical Equipment

Vitis AI offers powerful tools and IPs to uncover and identify hidden patterns from medical image data to help fight against disease and improve health.

Learn more about AMD in Healthcare AI >

AI On-Premise and in the Data Center

Datacenter Acceleration

Learn More about AMD in Data Center >

Getting Started

Developing for Embedded Platforms

Step 1: Set up your hardware platform

Step 2: Download and install the Vitis™ AI environment from GitHub

Step 3: Run Vitis AI environment examples with VART and the AI Library

Step 4: Access tutorials, videos, and more

Developing for Ryzen™ AI

Developing Ryzen AI Software

- Installation

- Development flow

- Getting Started: Examples, Demos and Tutorials
