Migrating from CUDA to Vitis

Introduction

CUDA (Compute Unified Device Architecture) is a parallel computing platform and application programming language specifically designed to target Nvidia GPUs. It is a software abstraction to code “hardware parallel” and in this brief article, we contrast the CUDA programming abstraction to what Vitis offers.

CUDA and Vitis

CUDA

In a nutshell, designing with CUDA amounts to coding for a large vectorizable loopi in a bottom-up fashion, where each vector element is coded as a kernel whose code implements one thread. In the CUDA programming abstraction, the series of sequential instructions in the thread are assigned to blocks, which themselves scale up in a grid. The CUDA compiler then manages the threads within a given block and organizes them as physical groups called warps. A CUDA block is assigned to a streaming multiprocessor (SM) optionally offering access to its high-speed shared memory.

The designer assesses how the application can take advantage of the GPU hardware and tweaks the CUDA code to create as many concurrent threads as possible while also optimizing memory accesses.

Often the designer would simply reuse a library that may already address the type of compute workload needed.

Vitis

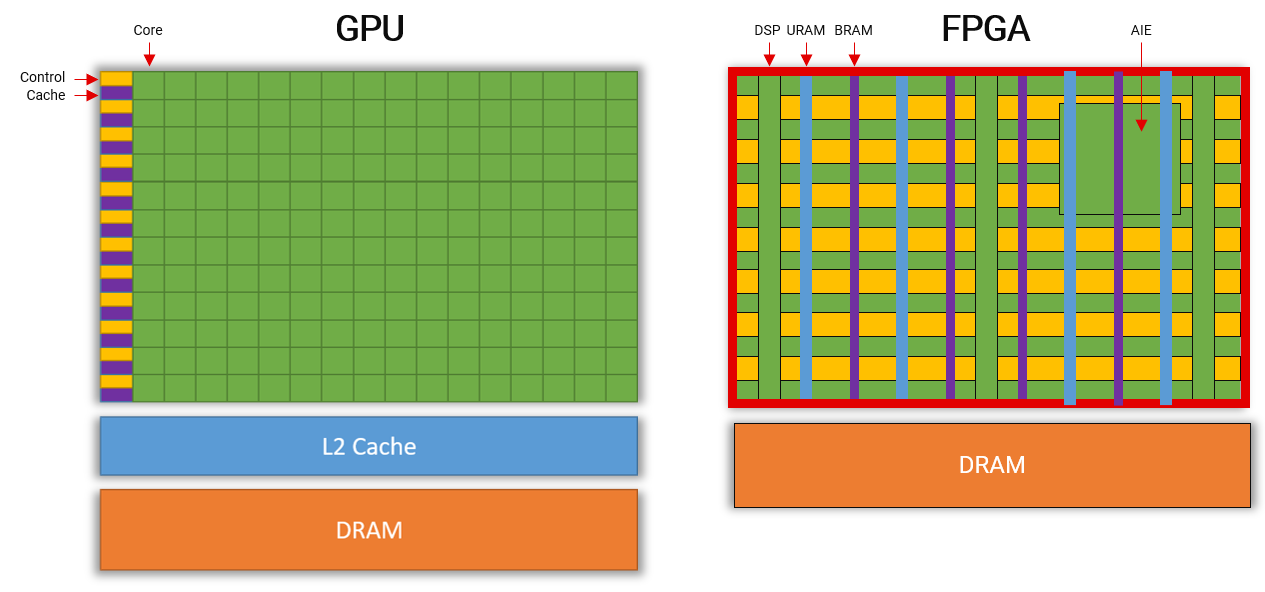

Unlike a GPU, an FPGA (Field Programmable Gate Array) is not pre-compartmented as an array of streaming multiprocessors, it is instead a whole slate of logic with columns of memories and math compute elements (the DSP blocks). In addition, dedicated computational blocks (the AIE engines) are found in the Versal AI Core series devices.

Fig 1: Contrasting a GPU and a FPGA

To map high-level C++ abstractions in Vitis, the C++ kernels come in two main forms:

High-level synthesis (HLS) code for the array of logic and columns of memories. The code can run directly on a CPU for emulation and pragmas combined with specific coding styles describe various levels of parallelism at the instruction or task level. This style of coding C++ with pragma is closer to OpenMP than CUDA

Graph-based descriptions for AI Engines kernels with scalar or vector processing. This abstraction is specifically designed for the AIE engines found in the Versal AI Core series.

Unlike CUDA, Vitis makes no assumption of a main vectorized loop in Vitis, it is only one of the many possible micro-code architectures that can be expressed. In fact, an equivalent to the SIMT structure of the GPU could be coded as an unrolled loop calling a function representing the thread in its body. But C++ and HLS can implement several additional micro-code architectures other than top unrolled loops and can mix serial and parallel code within the same kernel.

The Vitis kernel code on the right loosely describes an equivalent of CUDA threads in a C++ function “thread.”

The top main loop is explicitly unrolled via the UNROLL pragma.

...

// Compute vector elements

void thread(...) {

#pragma HLS INLINE off

...;

}

...

// TOP loop for full vector

loop: for (...) {

#pragma HLS UNROLL

thread(...);

}

C++ HLS micro-architecture for threads…

Vitis also offers numerous open-source libraries to get the best performance on many common functions.

Contrasting Programming Concepts for CUDA and Vitis

There are several differences between CUDA and Vitis, the programming model and the terminology are different. The following table discusses a few concepts to highlight and explain the differences

|

CUDA for the GPU |

C++ for the FPGA |

Philosophy, general approach |

Application is treated as a large vectorized loop, each thread computes an element of the vector. |

Several micro-architectures with recommended coding styles for task parallelism. The application is C++ with compiler directives (pragmas) that control parallelism, interfaces, data type precision, and more… |

Kernel connectivity, interfaces, |

Fixed connectivity, managed automatically. |

Parallel or optionally serial in the case of kernel to kernel connections. User customizes the bus sizes. |

C++ compatibility |

The CUDA code cannot run directly on the CPU but can be emulated. |

The kernel code can be compiled by GCC to run directly on the CPU (possibly including path to special libraries for streams and arbitrary precision types) |

Grid/Block/Thread |

Threads are computed in parallel as part of a vectorized loop. |

User chooses the level of parallelism, controls the interface width and tweaks data type precision wherever possible. |

Barrier synchronization |

Threads might run independently of each other and cause race conditions. |

The C for high-level synthesis guarantees correctness to the original C++ description and would require the user to add barriers in the code. |

| Data types | Native hardware support for floating-point operations (32-bit). Supports double and reduced precision floating point (16-bit). Supports select integer bit width. Supports mixed-precision Tensor Core TF32. |

Native hardware support for floating-point operations (32-bit). Supports double and reduced precision floating point (16-bit). Supports select integer bit width. Supports mixed-precision Tensor Core TF32. |

Memory hierarchy |

Register file |

No limits on “registers” (up to full device utilization) |

Streams |

System level queue of work. |

In Vitis, streams refer to serial connections. |

Streaming multi-processors (SMs) |

The CUDA blocks of threads are scheduled onto fixed hardware processors (the SMs). |

No fixed hardware processors to run the Vitis kernels. During kernel compilation, the necessary logic to sequence and schedule the different tasks is created. |

Optimizing kernels with CUDA and Vitis

In CUDA, the developer seeks to exert as many computations as possible while trying to take advantage of the high-performance shared memories in the SMs (Steaming Multiprocessor). Nvidia profiler tools such as Nsight Compute and Nsight Systems help pinpoint performance bottlenecks and provide suggestions.

Amongst the varied factors that can affect performance in a GPU:

Low occupancy, the kernels might only use a fraction of GPU device hardware capabilities

Slow off-chip memory accesses

Limited amount of shared memory

Register scarcity which would reduce the numbers of threads scheduled at a given time

Target performance difference as the number of SMs varies depending on the GPU targeted

In Vitis, the developer decides on a micro-architecture to code the type of parallelism that fits best the application. In some cases, memory storage can be avoided altogether by using serial interfaces between kernels and processing the data on the fly, without the need to store it.

Some of the advantages of designing C++ kernels in Vitis:

The exact kernel latency and amount of parallelism are known after kernel compilation and are unaffected by runtime

Available on-chip fast memory is larger than shared memory on SMs

No limitations on “registers,” Vitis kernel can access resources on the whole chip for a given kernel

Example design, a dot product kernel

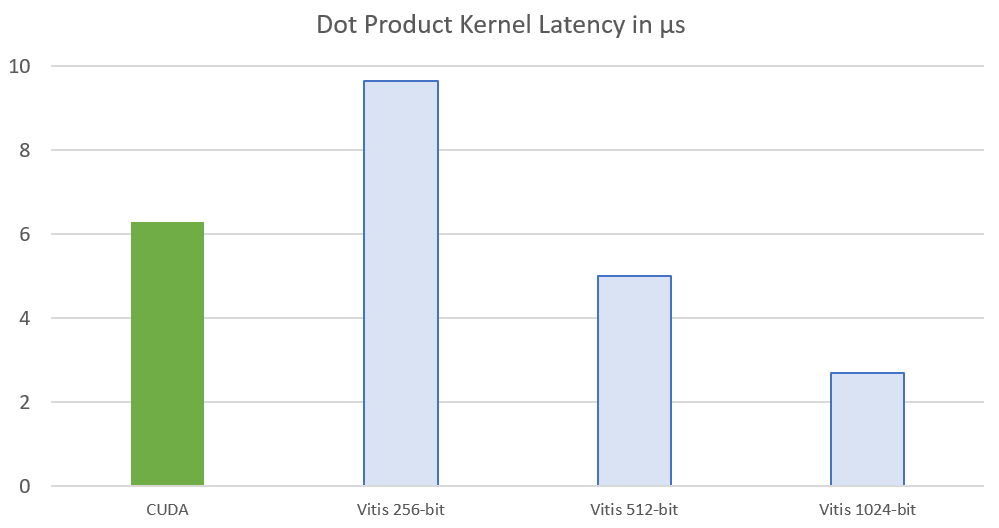

We now dig into an example of a vector dot product (a.k.a. inner product). The computation consists of two input vectors, whose elements are multiplied one by one and then all summed to produce a single scalar output. For example, if we take the dot product of two four-element vectors, we will get the following equation:

Overall, the strategy to implement such dot product in CUDA is as follows:

- Compute products in many blocks and store them into the block (SM) shared memory for each of the blocks

- Reduce locally, sum at the block level all the partial products in each shared memory used

- Let the host finish the reduction with the assumption that this number should be small

For the Vitis kernel code, using high-level synthesis, describe the algorithm very simply but make sure that:

- Interface is sufficiently wide to match the platform capabilities: this implies providing hints to the compiler to make multiple inputs available in parallel

- Unroll the loop to replicate hardware so that it can consume all the elements at once and match these replications to the number of elements available

- Turn off the floating-point precision check as the associative laws of algebra do not strictly hold for floating-point numbersiii (The CUDA code is also providing a different precision compared to the original C++ used for the Vitis kernel)

Below, on the left is kernel code used in CUDA codeiv

__global__ void dot( float *a, float *b, float *c ) {

__shared__ float cache[threadsPerBlock];

int tid = threadIdx.x + blockIdx.x * blockDim.x;

int cacheIndex = threadIdx.x;

float temp = 0;

while (tid < N) {

temp += a[tid] * b[tid];

tid += blockDim.x * gridDim.x;

}

// set the cache values

cache[cacheIndex] = temp;

// synchronize threads in this block

__syncthreads();

// for reductions, threadsPerBlock must be

// a power of 2 because of the following code

int i = blockDim.x/2;

while (i != 0) {

if (cacheIndex < i)

cache[cacheIndex] += cache[cacheIndex + i];

__syncthreads();

i /= 2;

}

if (cacheIndex == 0)

c[blockIdx.x] = cache[0];

}

...

// finish up on the CPU side

c = 0;

for (int i=0; i<blocksPerGrid; i++) {

c += partial_c[i];

}

...

Below is C++ for the Vitis compiler:

void dot( float *a, float *b, float *c )

{

float temp=0;

for(int i=0; i<N;i++)

temp += a[i] * b[i];

*c = temp;

}

Below is the full Vitis kernel code with the pragmas added into the source code:

const int N = 33 * 1024;

void dot( float *a, float *b, float *c ) {

#pragma HLS INTERFACE mode=m_axi bundle=gmema max_widen_bitwidth=256 port=a

#pragma HLS INTERFACE mode=m_axi bundle=gmemb max_widen_bitwidth=256 port=b

#pragma HLS INTERFACE mode=m_axi bundle=gmemc port=c

float temp=0;

for(int i=0; i<N;i++)

#pragma HLS UNROLL factor=8

temp += a[i] * b[i];

*c = temp;

}

Vitis C++ kernel code for dot product with pragmas (256-bit interface for an unroll of 8)

The interface pragma in the code above specifies a memory-mapped AXI (denoted by the mode m_axi) interface for each port. We specify different bundles for our inputs a and b to guarantee each has its own AXI memory controller which is imperative to get the best performance. Then, the max_widen_bitwidth modifier directs the compiler to use larger inputs than the implied 32-bit of the float data type. As a result, the compiler will assign (256/32) 8 inputs in parallel for each input a and b.

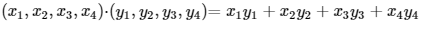

The unroll pragma in the loop replicates the body of the loop to match the number of inputs and creates the hardware for eight multiply-accumulate (MAC) functions that are subsequently reduced (see fig 2 below).

Fig 2: Replications of ports and MAC operators with final reduction.

When targeting Versal, the MAC is mapped onto a single DSP block as they support single-precision floating-point natively in that architecture.

In terms of performance, the amount of parallelism, hence the latency of the computation, can be dialed in via the interface bit width pragma we discussed above and the matching factor for the unroll pragma.

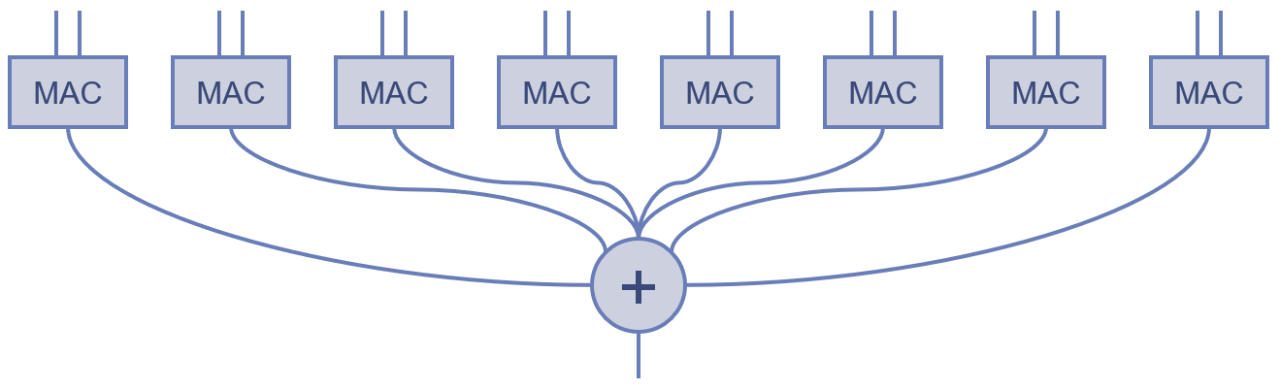

In the comparison below, we use the default settings of the CUDA code example with vector length of 33k single-precision floating-point numbers and a grid size of 32 for a block size of 256 (8,192 threads). We target a Tesla V100 and measure the kernel latency with Nsight Compute.

For the Vitis kernel, we use 3 different kernel interface sizes for 8, 16 and 32 MACs, the kernel interface being respectively of bit width 256, 512 and 1,024 and we target a Versal device running at 454 MHz (2.2ns period). We obtained the following results:

Fig 3:Performance Comparison (kernel latency in microseconds).

We see that the Vitis kernel latency scales and as expected performance doubles as we also double the number of MAC units and unroll the loop accordingly.

With 32 MACs and an interface of 1024-bit, the Vitis kernel runs 2.3x faster than the CUDA kernel while also completing the reduction which is not the case for the CUDA kernel example considered as it only returns the partial products of each block and leaves it to the host to finalize the computation.

Conclusion

There are sizable differences between CUDA and Vitis. A CUDA application can’t be automatically ported to Vitis but the developer will still find it rather simple to express parallelism for the Xilinx devices.

Using C++ has its advantages over CUDA, it can run on the CPU for emulation and dependencies made obvious by the compiler. Many of the complexities of CUDA thread management and cache memory limitations don’t apply in Vitis.

[1] Hennessy, John L. & Patterson, David A. “Computer Architecture: A Quantitative Approach” 5th Edition.

[1] Vitis Libraires. https://www.xilinx.com/products/design-tools/vitis/vitis-libraries.html

[1] Oracle corporation documentation. “What Every Computer Scientist Should Know About Floating-Point Arithmetic”: https://docs.oracle.com/cd/E19957-01/806-3568/ncg_goldberg.html

[1] Sanders, Jason & Kandrot, Edward “CUDA by Example”. https://github.com/CodedK/CUDA-by-Example-source-code-for-the-book-s-examples-

About Frédéric Rivoallon

Frédéric Rivoallon is a member of the software marketing team in San Jose, CA and is the product manager for AMD HLS, besides high-level synthesis Frédéric also has expertise in compute acceleration with AMD devices, RTL synthesis, and timing closure. Past experiences taught him video compression and board design.

See all of Frédéric Rivoallon's articles