Bring Video Analytics to a new level with Xilinx’s VMSS and VCK5000 Platform

What is VMSS?

Xilinx has developed the Alveo platforms, PCIe form-factor accelerator cards built on Xilinx's cutting-edge FPGA acceleration technology that combine software-level programmability with gate-level acceleration.

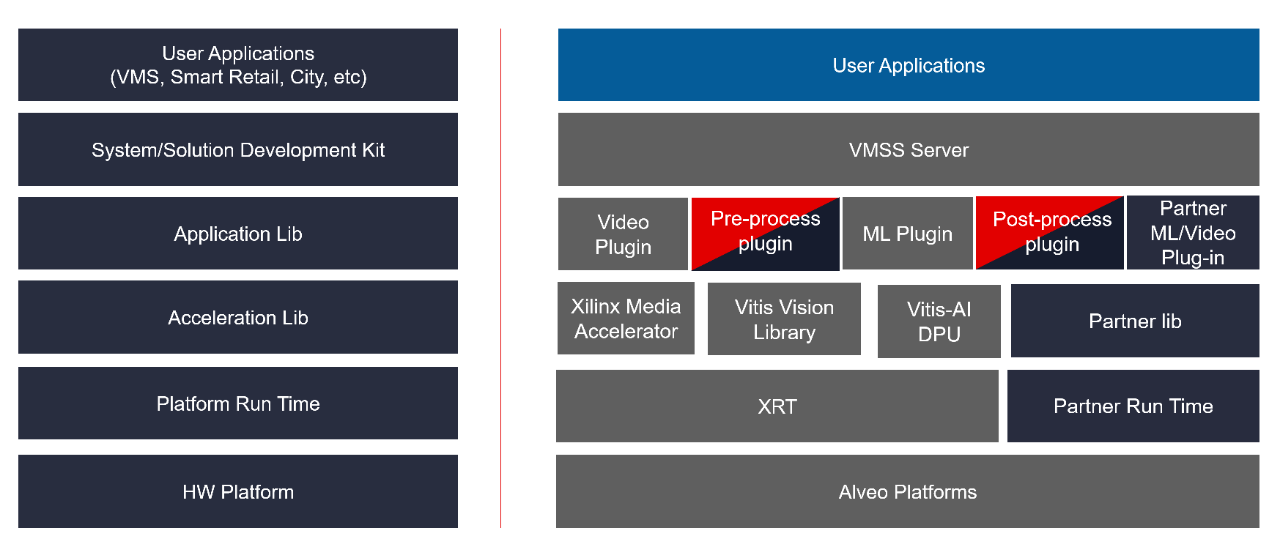

In addition, Xilinx has developed a software framework called the Video Machine Learning Streaming Server (VMSS). It sits between the Alveo platforms and user applications and enables Alveo-accelerated video analytics applications by providing an adaptable, easy-to-use development environment. That environment comprises the Xilinx runtime, Xilinx-optimized acceleration libraries, plug-ins that connect the accelerated libraries to video analytics applications, and a server framework that manages the entire smart video analytics pipeline and its server applications. Customers do not need to know how to program the FPGAs on the Alveo cards. VMSS is a configurable development framework designed to ingest and process video streams and produce ML inference results in a fully customizable, optimized, and accelerated pipeline.

As shown in Figure 1, VMSS has a fully customizable plug-in-based architecture that provides many out-of-the-box FPGA-accelerated video processing and machine learning plug-ins. Developers and partners can also develop their own plug-ins and attach them to the VMSS pipeline to enable their own customized video analytics dataflow and acceleration.

Figure 1 Xilinx Video Machine Learning Streaming Server (VMSS) Development Environment

VMSS Differentiators

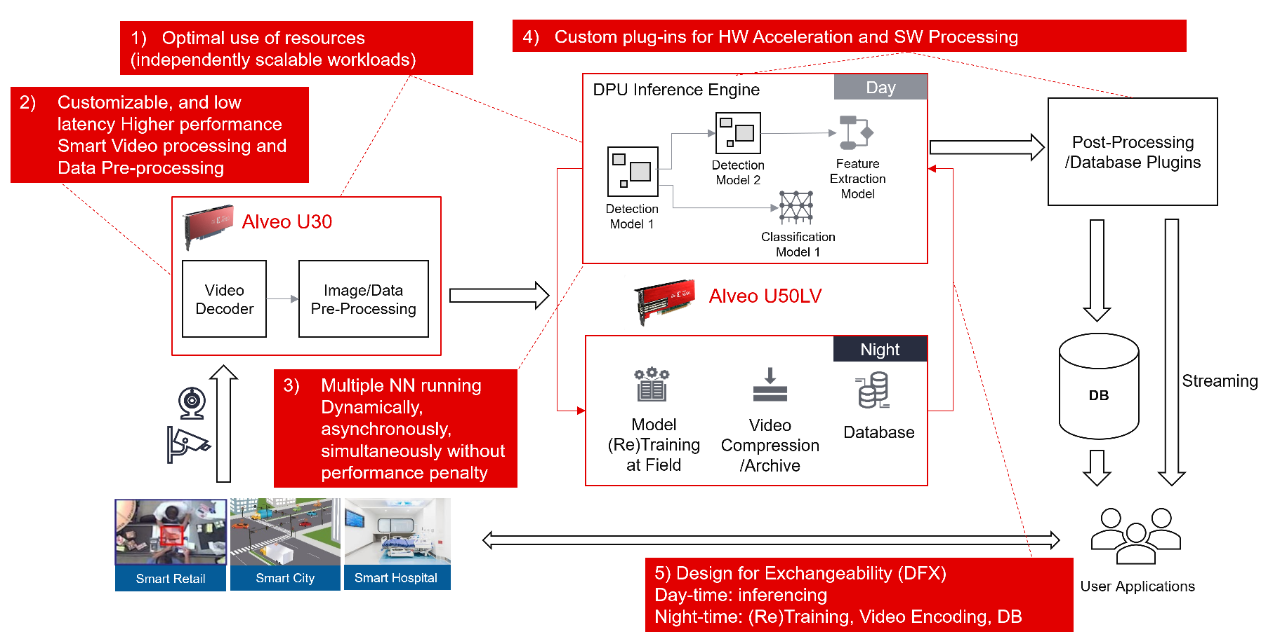

VMSS brings five major differentiators to accelerated video analytics applications, as shown in Figure 2.

1. Different video analytics applications and use cases have their own ratio of video processing workload to machine learning workload. In some use cases, each video stream requires machine learning analysis involving multiple computationally intensive deep learning models. In others, a large number of video streams must be monitored in real time, but each stream needs only simple ML analysis. In VMSS, accelerated plug-ins with different acceleration functions can be assigned to different Alveo accelerator cards. For example, the U30 is used by the video decoder and pre-processing plug-ins to handle video decoding, frame buffering, image cropping, scaling, and so on, while the U50LV is loaded with highly optimized machine learning engines, e.g. the DPU, and serves the ML plug-in for accelerated inference. Because video/image processing and machine learning workloads run on separate accelerators, each use case can reach optimal resource utilization and workload balance by configuring the system with only as many U30 and U50LV cards as its workload requires.

Figure 2 VMSS Processing Pipeline and Differentiators
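The sizing trade-off described in item 1 can be sketched in a few lines of Python. This is only an illustration, not a VMSS tool: the `CardCapacity` type, the `cards_needed` function and all capacity numbers are hypothetical placeholders, and real U30 and U50LV capacities would have to be measured on the actual workload.

```python
import math
from dataclasses import dataclass


@dataclass
class CardCapacity:
    """Hypothetical per-card capacity; measure real values on your own workload."""
    decode_streams: int = 0           # streams one card can decode and pre-process
    inferences_per_sec: float = 0.0   # ML inferences one card can run per second


def cards_needed(num_streams, fps, inferences_per_frame, video_card, ml_card):
    """Return (video_cards, ml_cards) needed for the given workload."""
    video_cards = math.ceil(num_streams / video_card.decode_streams)
    ml_load = num_streams * fps * inferences_per_frame  # inferences per second
    ml_cards = math.ceil(ml_load / ml_card.inferences_per_sec)
    return video_cards, ml_cards


# Placeholder capacities, NOT measured U30 or U50LV figures.
video_card = CardCapacity(decode_streams=16)
ml_card = CardCapacity(inferences_per_sec=1000.0)

# Many streams, light ML: one model run on every 10th frame.
many_light = cards_needed(64, 30, 0.1, video_card, ml_card)
# Fewer streams, heavy ML: three model runs on every frame.
few_heavy = cards_needed(16, 30, 3, video_card, ml_card)
print(many_light, few_heavy)
```

With these placeholder numbers, the first workload is dominated by video cards and the second by ML cards, which is the imbalance that assigning plug-ins to separate cards is meant to absorb.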

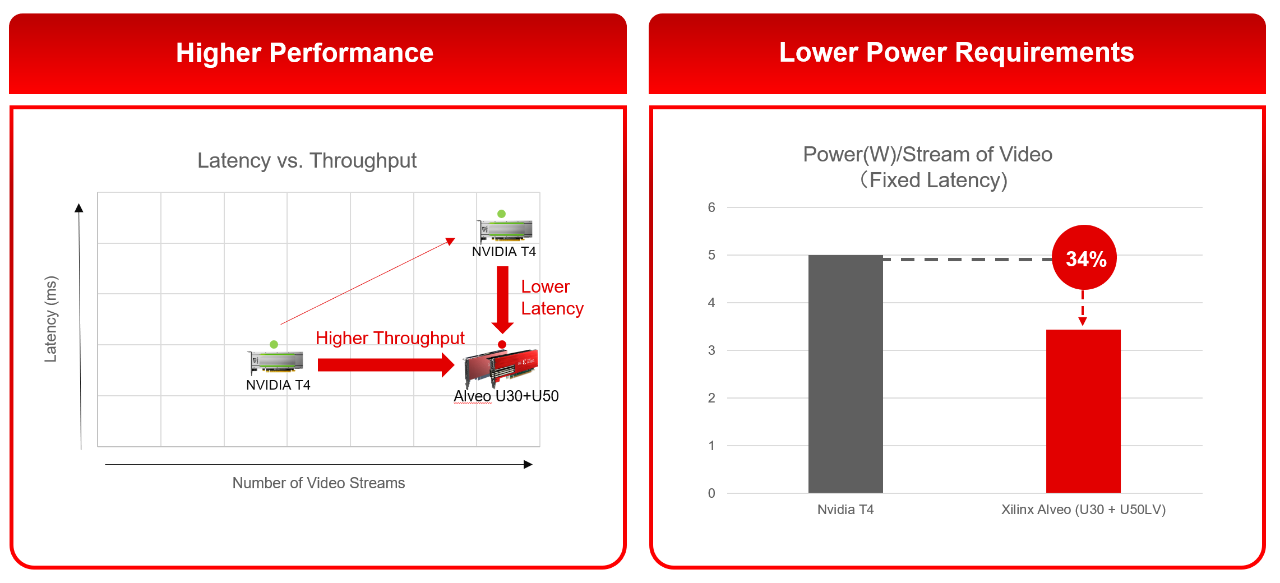

2. FPGAs have advantages in accelerating real-time applications that need both high throughput and low latency. Unlike GPUs and other accelerators designed around massive data parallelism, FPGAs process data independently in parallel processing pipelines, delivering high throughput while keeping latency deterministic and low. VMSS is optimized to deliver a low-latency, high-throughput end-to-end video analytics pipeline. Performance benchmarking results (Figure 3) show that VMSS with the U30 + U50LV combination handles more than twice as many video streams as an Nvidia T4 running the same video analytics workload, and consumes 34% less power per video stream than the GPU solution.

Figure 3 Video Analytics Performance Comparison

- Video input: 1080p 30fps H.264 GOP=3, Primary detector model: TinyYoloV3, Secondary classifier model: ResNet50

- GPU System: Intel Xeon Gold 6244, Nvidia Tesla T4 GPU card, Ubuntu 18.04, DeepStream 5.0, TensorRT 7.0, CUDA 10.2

- FPGA System: AMD EPYC 7542, Xilinx Alveo U30 + Xilinx Alveo U50LV, Ubuntu 18.04, VMSS v1.2

3. In today’s typical smart-world applications, such as smart retail, smart city, and smart healthcare, a single use case usually relies on multiple neural network models that must run simultaneously, in real time, for different stages and types of analysis. VMSS, with Xilinx’s Vitis-AI-based machine learning plug-in, is designed to run multiple models dynamically and asynchronously on the DPU, supporting single or multiple use cases without the performance penalty of frequently switching models in real time. To make it easier for developers to customize analytics pipelines, VMSS lets users define processing graphs of neural network models, including cascaded models, branching models, and other topologies, simply through a graph configuration file.

4. Along with the highly optimized default plug-ins, VMSS provides an open infrastructure that lets developers add their own custom plug-ins to fill any gap between the default VMSS plug-in functions and their specialized applications. Partners can also easily add their own accelerated components to VMSS as custom plug-ins; they only need to follow a few simple, intuitive interface guidelines. A typical machine learning plug-in that uses a partner’s own inference acceleration engine on FPGAs can be developed in a matter of weeks.

5. Another important differentiator between FPGAs and other accelerators is adaptability and hardware reconfigurability. The same Alveo accelerator card that accelerates video analytics can also be used for many other acceleration tasks. For example, while a retail store is closed, its Alveo cards can be used to retrain machine learning models locally and independently so that they include retail items newly introduced to the store, or to recompress archived videos with a more advanced codec to save local or cloud archive storage space. Being able to use Alveo accelerators 24/7 for different types of acceleration gives businesses the maximum return on their investment in Xilinx’s Alveo platforms.
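To make the processing graphs mentioned in item 3 more concrete, here is a minimal Python sketch of a cascading and branching model graph. The dictionary layout, the field names and the `execution_order` helper are hypothetical and are not the actual VMSS graph configuration schema; only the model names come from this article. The sketch uses the standard-library `graphlib` module (Python 3.9 or later) to derive an order in which every model runs after the models that feed it.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical graph description; the field names are illustrative and are
# NOT the actual VMSS configuration schema.
graph_config = {
    "nodes": {
        "detector": {"model": "TinyYoloV3"},
        "classifier_a": {"model": "ResNet50"},
        "classifier_b": {"model": "ResNet18"},
    },
    # Each edge feeds the output of the first node into the second.
    "edges": [
        ("detector", "classifier_a"),  # cascade: detector -> classifier
        ("detector", "classifier_b"),  # branch: same detections, second model
    ],
}


def execution_order(config):
    """Return node names so each model runs after the models feeding it."""
    predecessors = {name: set() for name in config["nodes"]}
    for src, dst in config["edges"]:
        if src not in predecessors or dst not in predecessors:
            raise ValueError(f"edge ({src}, {dst}) references an unknown node")
        predecessors[dst].add(src)
    # static_order() raises graphlib.CycleError if the graph contains a cycle.
    return list(TopologicalSorter(predecessors).static_order())


order = execution_order(graph_config)
print(order)
```

The detector always comes first; the relative order of the two classifiers is not fixed, since neither depends on the other and they could run asynchronously.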

Enhanced VMSS with VCK5000 Platform

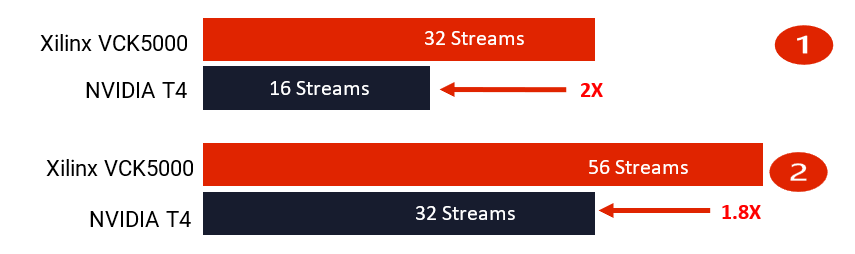

With the newly added support for Xilinx’s AIE-powered Versal VCK5000 platform, VMSS reaches the next level of capability: it can support more streams and handle more complex machine learning models at high throughput.

For example, VMSS running on the VCK5000 can handle 32 streams of a pipelined YoloV3 detector + ResNet18 classifier video analytics workload, and 56 streams if the detector is replaced with TinyYoloV3. Compared to GPU solutions such as the T4, it reaches 2X the processing throughput for the same end-to-end video analytics pipelines.

1. AI model: YoloV3 + ResNet18; HW: 1x Xilinx VCK5000 vs. 1x Nvidia T4
2. AI model: ResNet50 & TinyYoloV3; HW: 1x Xilinx VCK5000 vs. 1x Nvidia T4

Figure 4 Video Analytics Performance with VCK5000
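As a rough planning aid, the per-card stream counts quoted above can be turned into a card count for a larger deployment. The sketch below assumes that capacity scales linearly with the number of VCK5000 cards and that the streams resemble the benchmarked ones; both are assumptions, and the `vck5000_cards` function and the 200-stream deployment are hypothetical.

```python
import math

# Streams per VCK5000 card, as quoted in the text above.
STREAMS_PER_CARD = {
    "YoloV3 + ResNet18": 32,
    "TinyYoloV3 + ResNet18": 56,
}


def vck5000_cards(num_streams, pipeline):
    """Cards needed, assuming capacity scales linearly with card count."""
    return math.ceil(num_streams / STREAMS_PER_CARD[pipeline])


# Hypothetical deployment of 200 camera streams.
for pipeline in STREAMS_PER_CARD:
    print(pipeline, vck5000_cards(200, pipeline))
```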

To learn more details about VMSS and typical example applications of VMSS, please find our technical overview here: https://www.xilinx.com/products/acceleration-solutions/aupera/vmss-technical-overview.html

About Yao Fu

Dr. Yao Fu is the System Architect in AMD Data Center Group leading the efforts on machine learning and AI solutions. He has led the development of various foundational methodologies optimizing FPGA based deep learning solutions with higher throughput, lower latency and better power efficiency. The INT8 optimization methodology Dr. Fu developed is widely adopted today by most of the deep learning inference solutions on UltraScale and UltraScale+ devices.
